Data Models (Nodes)

The Reasoning Flows component for defining logical data entities and connecting them to the Reasoning Atlas.

Purpose

Data Models, also referred to as Nodes, represent structured, logical data entities integrated directly into Reasoning Flows. Unlike standalone data sources, they define semantic entities (e.g., customers, transactions, products) that other components in the workflow can reference and reuse.

By embedding Data Models into a project, teams maintain clarity, structure, and consistency across workflows while anchoring key business concepts within the logic of each process.

Where It Fits in Reasoning Flows

In the overall Reasoning Flows architecture:

- Extract & Load brings raw or external data into the system.

- Repository Tables register datasets for reuse.

- Transform & Prepare cleans, standardizes, and prepares data.

- AI & Machine Learning builds and trains intelligent models.

- Visual Objects turns processed data into reports or APIs.

- Data Models (Nodes) define the logical meaning and structure of data entities for use within Reasoning Flows.

- Reasoning Atlas connects Data Models to organizational context, semantics, and generative reasoning.

Goal: Data Models act as the semantic bridge between data pipelines and business concepts, ensuring every data flow is traceable, documented, and aligned with the enterprise knowledge graph.

Key Features

Defines Logical Data Models

Establishes structured entities (e.g., Customer, Product, Transaction) that are part of Reasoning Flows.

Reference for Downstream Objects

Serves as a centralized data contract — enabling downstream pipelines, APIs, or ML components to use consistent structures.

Promotes Modularity and Clarity

Encapsulates business logic within well-defined data entities, simplifying documentation and maintenance.

Ensures Consistency

Keeps schema definitions and transformations aligned across multiple workflows.
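To make the "centralized data contract" idea concrete, here is a minimal Python sketch of an entity definition that downstream components could check records against. It is an illustration only: the `Field` and `DataModel` classes, the `validate` method, and the customer attributes are hypothetical and are not part of any ARPIA or Reasoning Flows API.

```python
from dataclasses import dataclass


# Hypothetical illustration only: these classes are NOT a Reasoning Flows API.
@dataclass(frozen=True)
class Field:
    name: str
    dtype: str            # descriptive only in this sketch; not enforced
    required: bool = True


@dataclass(frozen=True)
class DataModel:
    name: str
    fields: tuple[Field, ...]

    def validate(self, record: dict) -> list[str]:
        """Return contract violations for one record (empty list if valid)."""
        errors = []
        for f in self.fields:
            missing = f.name not in record or record[f.name] is None
            if missing and f.required:
                errors.append(f"missing required field: {f.name}")
        known = {f.name for f in self.fields}
        unknown = set(record) - known
        errors.extend(f"unexpected field: {u}" for u in sorted(unknown))
        return errors


# Assumed attributes for a customer entity; the real schema is project-specific.
CUSTOMER = DataModel(
    name="dm_customer",
    fields=(
        Field("customer_id", "string"),
        Field("email", "string"),
        Field("segment", "string", required=False),
    ),
)
```

Because every consumer validates against the same `CUSTOMER` definition, a pipeline, an API, and an ML component would all agree on which attributes a customer record must carry.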

Recommended Use Cases

- Integrating business entities (like customers or orders) as part of a data transformation or AI pipeline

- Structuring and documenting how datasets interact across the workflow lifecycle

- Anchoring and tracing inputs, transformations, and outputs for governance and explainability

- Enabling semantic linkage between Reasoning Flows and the Reasoning Atlas for reasoning and generative AI

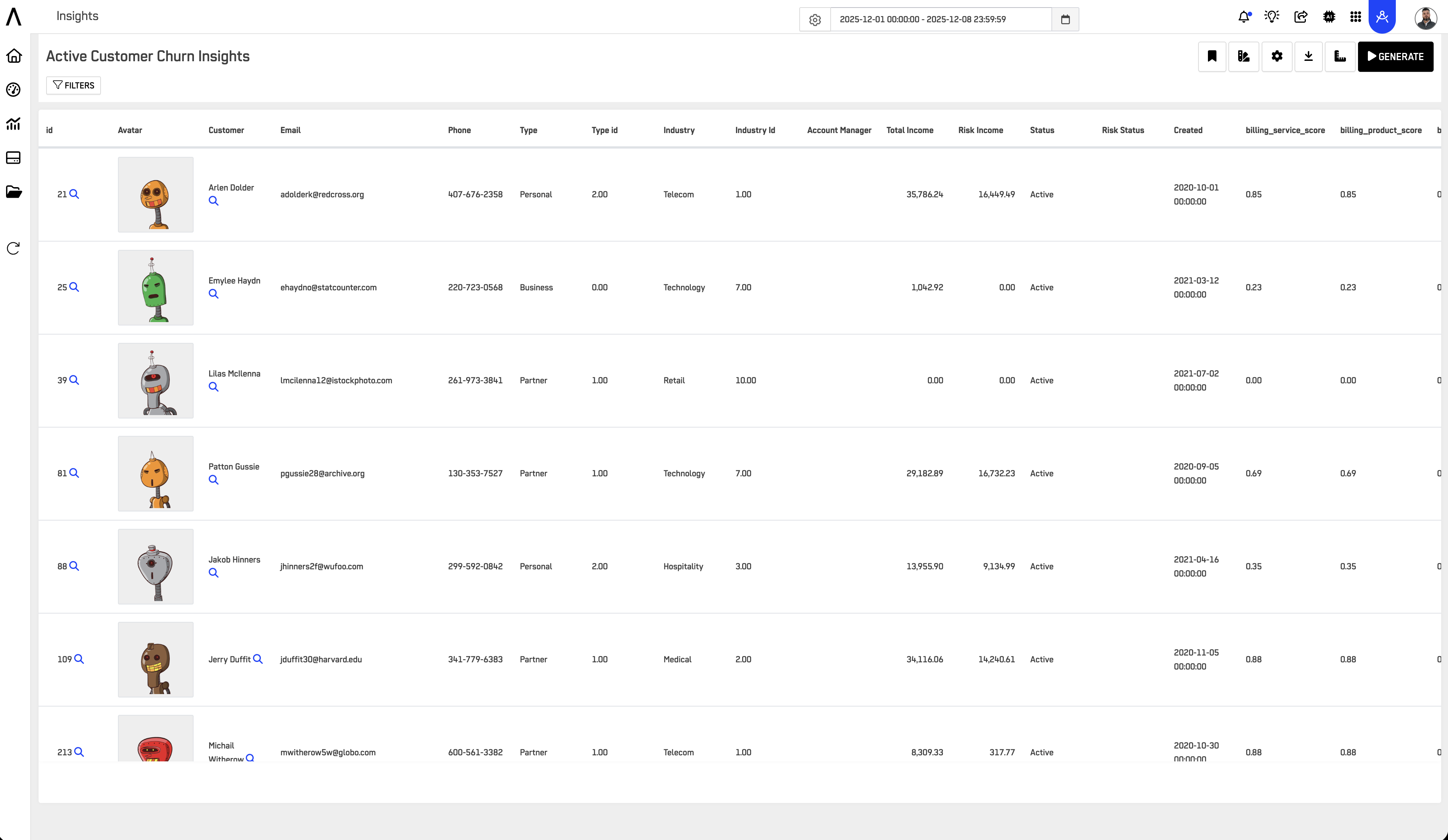

Visual Example

Example: A "Customer Model" Node defines customer-level attributes consumed by ML pipelines and exposed as a unified business entity.

Connection to Data Layers

Data Models should be built on properly classified data:

| Data Layer | Data Model Usage |
|---|---|
| RAW | Not recommended; clean data first |
| CLEAN | Acceptable for development and testing |
| GOLD | Primary source for production Data Models |
| OPTIMIZED | Used when Data Models require specific performance tuning |

Important: Only GOLD and OPTIMIZED tables are indexed in the Knowledge Catalog for reasoning and LLM-powered exploration.
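The layer guidance above can be expressed as a small policy check. The following is a sketch under stated assumptions: the `Layer` enum and both helper functions are hypothetical names, and treating OPTIMIZED as production-eligible alongside GOLD is an interpretation of the table rather than a documented rule.

```python
from enum import Enum


class Layer(Enum):
    RAW = "RAW"
    CLEAN = "CLEAN"
    GOLD = "GOLD"
    OPTIMIZED = "OPTIMIZED"


# Assumption: OPTIMIZED is treated as production-eligible, like GOLD.
PRODUCTION_LAYERS = {Layer.GOLD, Layer.OPTIMIZED}
# CLEAN is additionally acceptable for development and testing; RAW never is.
DEVELOPMENT_LAYERS = PRODUCTION_LAYERS | {Layer.CLEAN}


def is_allowed_source(layer: Layer, production: bool) -> bool:
    """Check whether a table in `layer` may back a Data Model."""
    allowed = PRODUCTION_LAYERS if production else DEVELOPMENT_LAYERS
    return layer in allowed


def is_indexed_in_catalog(layer: Layer) -> bool:
    """Only GOLD and OPTIMIZED tables are indexed in the Knowledge Catalog."""
    return layer in {Layer.GOLD, Layer.OPTIMIZED}
```

A check like this could run before a Data Model is promoted, so that a model still pointing at a CLEAN table is flagged before it reaches production.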

Best Practices

Define Data Models early in project design to serve as anchors for business concepts.

Reuse the same Node across multiple workflows for consistency. Avoid creating duplicate definitions for the same business entity.

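Always link Data Models to source tables from a classified layer for traceability: GOLD (or OPTIMIZED where performance tuning is required) for production, and CLEAN only for development and testing.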

Document entity relationships and data lineage in the Reasoning Atlas.

Use clear naming conventions for discoverability:

- dm_customer: Customer data model

- dm_product: Product data model

- dm_transaction: Transaction data model
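A naming convention like this is easy to enforce automatically. The sketch below assumes the rule is "a `dm_` prefix followed by lowercase snake_case"; the source only shows the `dm_` prefix in its three examples, so the exact pattern and the function name `is_valid_model_name` are assumptions.

```python
import re

# Assumed rule: "dm_" prefix, then lowercase snake_case starting with a letter.
DM_NAME = re.compile(r"dm_[a-z][a-z0-9]*(?:_[a-z0-9]+)*")


def is_valid_model_name(name: str) -> bool:
    """Return True if `name` follows the assumed dm_ naming convention."""
    return DM_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["dm_customer", "dm_product", "Customer", "dm_"]:
        print(candidate, is_valid_model_name(candidate))
```

Running a check like this when a Node is created helps keep Data Models discoverable by prefix search.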

Related Documentation

- Reasoning Atlas Overview: Understand how Data Models connect to organizational knowledge and semantics.

- Transform & Prepare: Learn how data is standardized before being modeled.

- Repository Tables: See how base data is registered for reuse.

- ARPIA Data Layer Framework: Follow best practices for naming and tagging data layers (RAW → CLEAN → GOLD → OPTIMIZED).