Data Governance - Data Quality

Data quality metrics are indicators used to assess the condition and fitness for use of data within an organization. They provide a quantitative basis for evaluating data health across dimensions such as accuracy, completeness, consistency, and timeliness. Understanding and actively managing these metrics is fundamental to ensuring that data meets the standards required for operational processes, analytics, and decision-making.

-

✅ Accuracy: This metric measures the correctness and precision of data compared with real-world values or verified sources of truth. Accuracy metrics help identify errors such as incorrect entries, misspellings, and outdated information. For instance, in a customer database, accuracy involves checking that addresses, names, and contact details match the latest verified information.
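As a minimal sketch, assuming a trusted reference copy of the records is available and keyed by the same ID, accuracy can be scored as the share of checked field values that match the reference. The function `accuracy_rate` and the record layout are hypothetical illustrations, not part of any specific tool.

```python
def accuracy_rate(records, reference, fields):
    """Share of field values in `records` that match the trusted `reference`.

    Both arguments map a record ID to a dict of field values (hypothetical layout).
    Records without a reference entry are skipped because they cannot be verified.
    Returns None when nothing could be checked.
    """
    checked = matched = 0
    for record_id, record in records.items():
        truth = reference.get(record_id)
        if truth is None:
            continue  # no source of truth for this record
        for field in fields:
            checked += 1
            if record.get(field) == truth.get(field):
                matched += 1
    return matched / checked if checked else None


customers = {
    1: {"name": "Ana Silva", "city": "Lisbon"},
    2: {"name": "John Smtih", "city": "Boston"},  # misspelled name
}
verified = {
    1: {"name": "Ana Silva", "city": "Lisbon"},
    2: {"name": "John Smith", "city": "Boston"},
}

print(accuracy_rate(customers, verified, ["name", "city"]))  # 0.75
```

Exact equality is the strictest comparison; fuzzy matching for names or addresses would be a separate design choice.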

-

🧩 Completeness: Completeness metrics evaluate whether all expected data is present in the dataset, identifying both missing values within records and entire records that are expected but absent. Completeness is critical in scenarios such as regulatory reporting, where missing data can lead to non-compliance, and customer service, where incomplete client profiles can degrade service quality.
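A possible sketch of both checks, assuming records are plain dictionaries: field-level completeness as the share of rows with a non-empty value, and record-level completeness as the set of expected IDs that never arrived. The function names and the `id` key are hypothetical.

```python
def completeness(rows, required_fields):
    """Per-field share of rows where the field is present and non-empty."""
    total = len(rows)
    result = {}
    for field in required_fields:
        # A field counts as filled unless it is absent, None, or an empty string.
        filled = sum(1 for row in rows if row.get(field) not in (None, ""))
        result[field] = filled / total if total else None
    return result


def missing_records(rows, expected_ids, key="id"):
    """IDs that are expected but absent from the dataset."""
    present = {row.get(key) for row in rows}
    return sorted(set(expected_ids) - present)


profiles = [
    {"id": 1, "email": "a@example.com", "phone": "555-0100"},
    {"id": 2, "email": "", "phone": "555-0101"},
    {"id": 3, "email": "c@example.com"},  # phone missing entirely
    {"id": 4, "email": "d@example.com", "phone": None},
]

print(completeness(profiles, ["email", "phone"]))  # {'email': 0.75, 'phone': 0.5}
print(missing_records(profiles, [1, 2, 3, 4, 5]))  # [5]
```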

-

🔄 Consistency: Consistency metrics check that data is uniform and logically coherent across datasets, and within the same dataset over time. This includes verifying that formats and values follow predefined conventions across data stores and that a dataset contains no contradictory records. For example, all dates in a transaction log should use the same format, and account balances should agree between the transaction and summary tables.
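The two consistency checks in the example above can be sketched as follows, assuming dates should use the ISO 8601 `YYYY-MM-DD` format and amounts are stored as integer cents. The table layouts and function names are hypothetical.

```python
import re
from collections import defaultdict

# Assumed house format for dates: ISO 8601 calendar dates (YYYY-MM-DD).
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def nonconforming_dates(transactions):
    """IDs of transactions whose date string does not use the agreed format."""
    return [t["id"] for t in transactions if not ISO_DATE.fullmatch(t["date"])]


def balance_mismatches(transactions, summary):
    """Accounts whose summed transactions disagree with the summary table.

    Amounts are integer cents, so the comparison is exact.
    """
    totals = defaultdict(int)
    for t in transactions:
        totals[t["account"]] += t["amount"]
    accounts = set(totals) | set(summary)
    return sorted(a for a in accounts if totals.get(a, 0) != summary.get(a, 0))


transactions = [
    {"id": "t1", "account": "A", "date": "2024-03-01", "amount": 10000},
    {"id": "t2", "account": "A", "date": "03/02/2024", "amount": -2500},
    {"id": "t3", "account": "B", "date": "2024-03-02", "amount": 5000},
]
summary = {"A": 7500, "B": 4000}  # B's summary balance is stale

print(nonconforming_dates(transactions))  # ['t2']
print(balance_mismatches(transactions, summary))  # ['B']
```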

-

⏱️ Timeliness: Timeliness measures the delay between the occurrence of an event and its representation in the database. This metric is essential wherever real-time or near-real-time data matters, such as monitoring systems, where delayed updates can lead to missed opportunities or risk incidents.
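One way to quantify timeliness, assuming each record carries both an event timestamp (`occurred_at`) and a load timestamp (`loaded_at`), is to compute the per-record delay and the share of records that arrived within an agreed window. The field names and the five-minute window are illustrative assumptions.

```python
from datetime import datetime, timedelta


def latencies(events):
    """Delay between when each event occurred and when it was loaded."""
    return [e["loaded_at"] - e["occurred_at"] for e in events]


def share_within(events, window):
    """Fraction of events loaded no later than `window` after they occurred."""
    delays = latencies(events)
    if not delays:
        return None
    return sum(d <= window for d in delays) / len(delays)


t0 = datetime(2024, 3, 1, 12, 0, 0)
events = [
    {"occurred_at": t0, "loaded_at": t0 + timedelta(seconds=30)},
    {"occurred_at": t0, "loaded_at": t0 + timedelta(minutes=2)},
    {"occurred_at": t0, "loaded_at": t0 + timedelta(minutes=20)},
]

print(max(latencies(events)))  # 0:20:00
print(share_within(events, timedelta(minutes=5)))
```

Tracking the worst-case delay alongside the share within the window helps distinguish a generally slow pipeline from occasional stragglers.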

-

🔒 Reliability: This metric assesses the extent to which data is consistent and correct across multiple sources and over time. It helps gauge the trustworthiness of data sources and the processes used to collect, store, and manage data.

-

🧩 Uniqueness: Uniqueness metrics are used to detect and prevent duplicate entries in data tables. Ensuring that each record is unique where required (such as a user ID or email address in a user account table) is crucial for maintaining data integrity.
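A minimal sketch of a uniqueness check on one or more key columns. Counting distinct keys over total rows is one common convention, not the only one, and the case-insensitive email normalization is an assumption to adapt to your own matching rules. Field and function names are hypothetical.

```python
from collections import Counter


def _key(row, key_fields):
    # Trim and lowercase strings so "Bob@Example.com " and "bob@example.com" collide.
    return tuple(
        row[f].strip().lower() if isinstance(row[f], str) else row[f]
        for f in key_fields
    )


def duplicate_keys(rows, key_fields):
    """Key values that occur in more than one row, with their counts."""
    counts = Counter(_key(row, key_fields) for row in rows)
    return {k: n for k, n in counts.items() if n > 1}


def uniqueness_rate(rows, key_fields):
    """Distinct key values divided by total rows (1.0 means no duplicates)."""
    if not rows:
        return None
    return len({_key(row, key_fields) for row in rows}) / len(rows)


users = [
    {"user_id": 1, "email": "ana@example.com"},
    {"user_id": 2, "email": "bob@example.com"},
    {"user_id": 3, "email": "Bob@Example.com "},
    {"user_id": 4, "email": "cy@example.com"},
]

print(duplicate_keys(users, ["email"]))  # {('bob@example.com',): 2}
print(uniqueness_rate(users, ["email"]))  # 0.75
print(uniqueness_rate(users, ["user_id"]))  # 1.0
```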

-

✔️ Validity: Validity metrics check data against relevant rules and constraints to ensure it conforms to specific syntax (format, type, range) and semantics (meaning and business rules). For example, a validity check might confirm that postal codes match the format and location appropriate to a country or region.
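The syntactic half of the postal-code example can be sketched with one regular expression per country. The patterns below are simplified illustrations (real formats have more edge cases), and confirming that a code matches the stated location would additionally require a reference lookup, which this sketch does not attempt.

```python
import re

# Illustrative patterns only; real postal formats have more edge cases.
POSTAL_PATTERNS = {
    "US": re.compile(r"\d{5}(-\d{4})?"),
    "CA": re.compile(r"[A-Z]\d[A-Z] ?\d[A-Z]\d"),
    "NL": re.compile(r"\d{4} ?[A-Z]{2}"),
}


def invalid_postal_codes(addresses):
    """IDs of addresses whose postal code does not fit the country's format.

    Countries without a known pattern are flagged too, so gaps in the rule set
    surface instead of passing silently.
    """
    bad = []
    for addr in addresses:
        pattern = POSTAL_PATTERNS.get(addr["country"])
        if pattern is None or not pattern.fullmatch(addr["postal_code"]):
            bad.append(addr["id"])
    return bad


addresses = [
    {"id": 1, "country": "US", "postal_code": "94105"},
    {"id": 2, "country": "US", "postal_code": "9410"},  # too short
    {"id": 3, "country": "CA", "postal_code": "K1A 0B1"},
    {"id": 4, "country": "NL", "postal_code": "1012 AB"},
    {"id": 5, "country": "XX", "postal_code": "12345"},  # unknown country
]

print(invalid_postal_codes(addresses))  # [2, 5]
```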

-

🔗 Integrity: Data integrity metrics focus on the correctness and completeness of relationships within data, such as foreign keys and other constraints that maintain the logical relationships between tables.
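A referential-integrity check can be sketched as a search for orphaned child rows, that is, rows whose foreign key has no matching primary key in the parent table. As in SQL, a NULL (`None`) foreign key is not treated as a violation here. The table and column names are hypothetical.

```python
def orphaned_rows(child_rows, fk_field, parent_rows, pk_field):
    """Child rows whose foreign key matches no primary key in the parent table.

    A None foreign key is skipped, mirroring SQL, where NULL does not violate
    a foreign-key constraint.
    """
    parent_keys = {row[pk_field] for row in parent_rows}
    return [
        row
        for row in child_rows
        if row[fk_field] is not None and row[fk_field] not in parent_keys
    ]


customers = [{"customer_id": 1}, {"customer_id": 2}]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 3},  # customer 3 does not exist
    {"order_id": 12, "customer_id": None},
]

orphans = orphaned_rows(orders, "customer_id", customers, "customer_id")
print([o["order_id"] for o in orphans])  # [11]
```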

Managing these metrics typically involves routine data quality assessments using tools that automatically measure and report on each dimension. Organizations often implement dashboards and alerts to monitor data quality metrics continuously, so that issues are detected and corrected promptly. High data quality across these dimensions supports robust analytics, accurate reporting, customer satisfaction, better decision-making, and overall operational efficiency.
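Continuous monitoring often comes down to comparing each metric's latest score against a minimum threshold and raising an alert on breaches. A minimal sketch, with hypothetical metric names and threshold values; in practice the scores would come from scheduled assessments and the alerts would go to a dashboard or notification channel.

```python
# Hypothetical minimum acceptable scores per metric (0.0 to 1.0).
THRESHOLDS = {"completeness": 0.98, "uniqueness": 1.0, "validity": 0.95}


def breached(scores, thresholds=THRESHOLDS):
    """Metrics whose latest score falls below its configured minimum."""
    return {
        name: (score, thresholds[name])
        for name, score in scores.items()
        if name in thresholds and score < thresholds[name]
    }


latest = {"completeness": 0.991, "uniqueness": 0.97, "validity": 0.93}
for metric, (score, minimum) in sorted(breached(latest).items()):
    print(f"ALERT {metric}: {score:.2%} < {minimum:.2%}")
```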

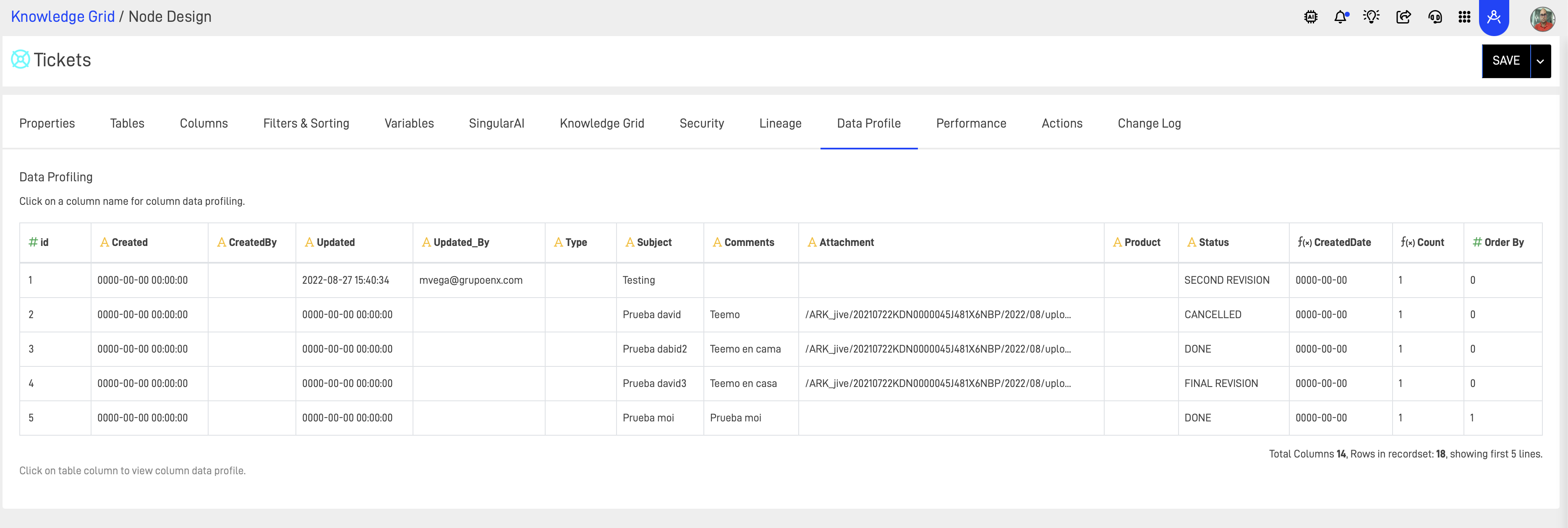

On AP, the platform provides data profiling for each column in a KG Node. The following image shows the Data Profile tab in a Node's settings:

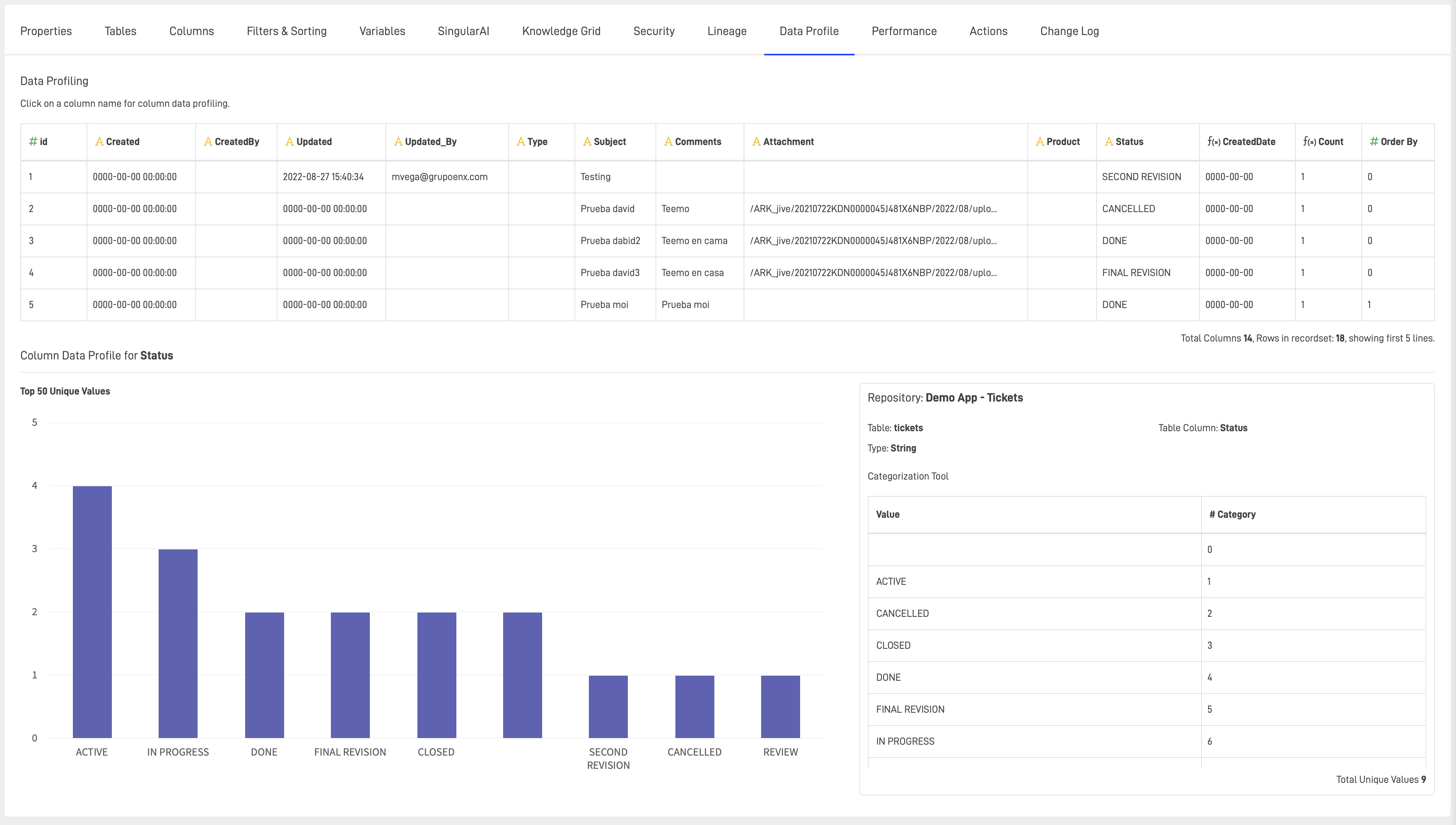

From this tab, you can analyze any column by selecting it. For example, selecting the Status column displays that column's data profile.

This column-level profiling supports data quality validation as well as general data exploration.
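The exact statistics AP shows in the Data Profile tab are not listed here. As a rough, tool-independent illustration of what column profiling typically computes (row count, nulls, distinct values, most frequent values), the following minimal Python sketch profiles a hypothetical Status column; it is not AP's implementation.

```python
from collections import Counter


def profile_column(values, top_n=3):
    """Basic profile of one column: counts, nulls, distinct values, top values."""
    non_null = [v for v in values if v is not None]
    nulls = len(values) - len(non_null)
    freq = Counter(non_null)
    return {
        "count": len(values),
        "nulls": nulls,
        "null_pct": nulls / len(values) if values else 0.0,
        "distinct": len(freq),
        "top_values": freq.most_common(top_n),
    }


# Hypothetical sample values for a Status column.
status = ["active", "active", "inactive", None, "pending", "active", "ACTIVE"]
print(profile_column(status))
```

In this sample, the separate `ACTIVE` and `active` values are the kind of consistency issue a profile makes visible at a glance.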

Updated over 1 year ago