Comprehensive Overview of Models Compatible with the Arpia Platform: AutoML and LLM Models for Generative AI

This document provides a detailed overview of the models compatible with the Arpia Platform, specifically focusing on AutoML through AutoGluon and the selection of Large Language Models (LLMs) utilized for Generative AI applications. These models enable a wide range of AI-driven capabilities, from data analysis and prediction to semantic search and advanced natural language processing.

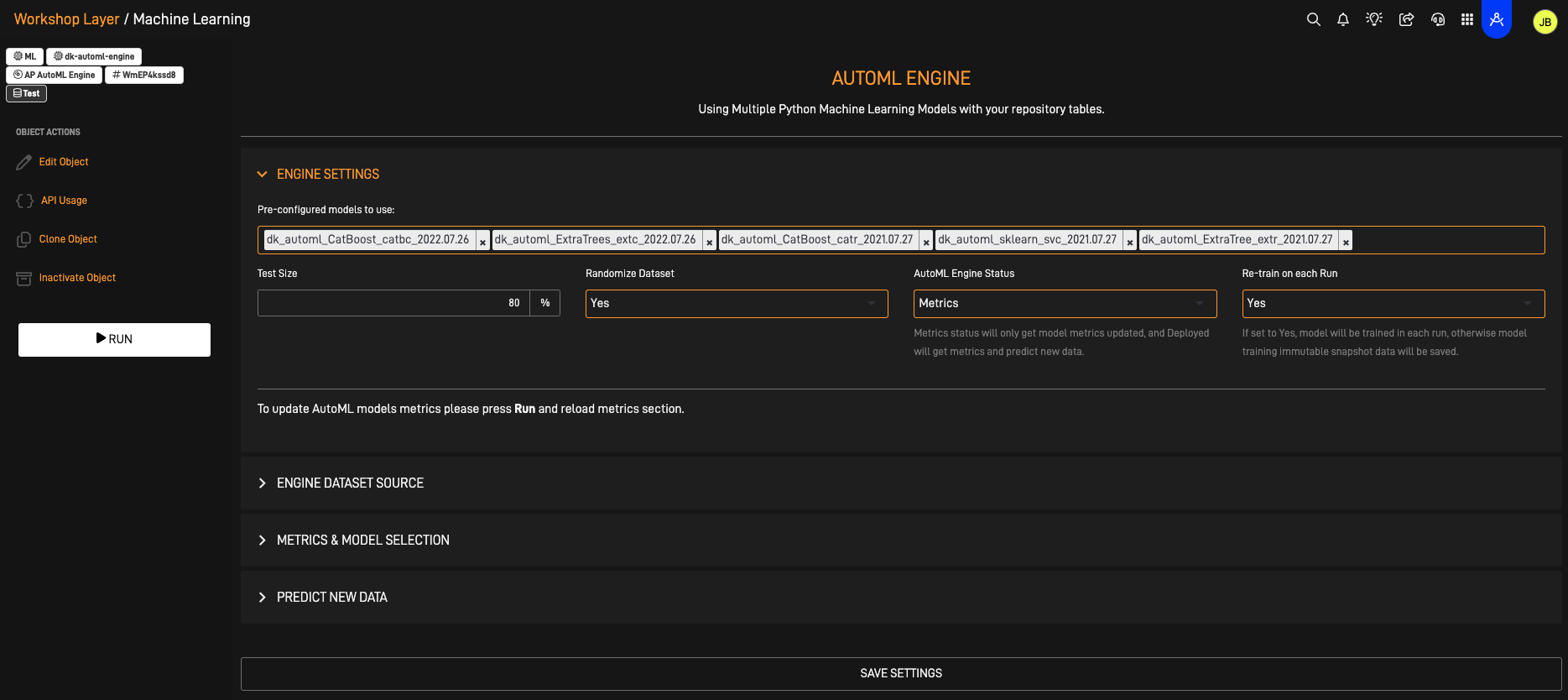

🧠 AutoML Capabilities: AutoGluon and YOLO Models

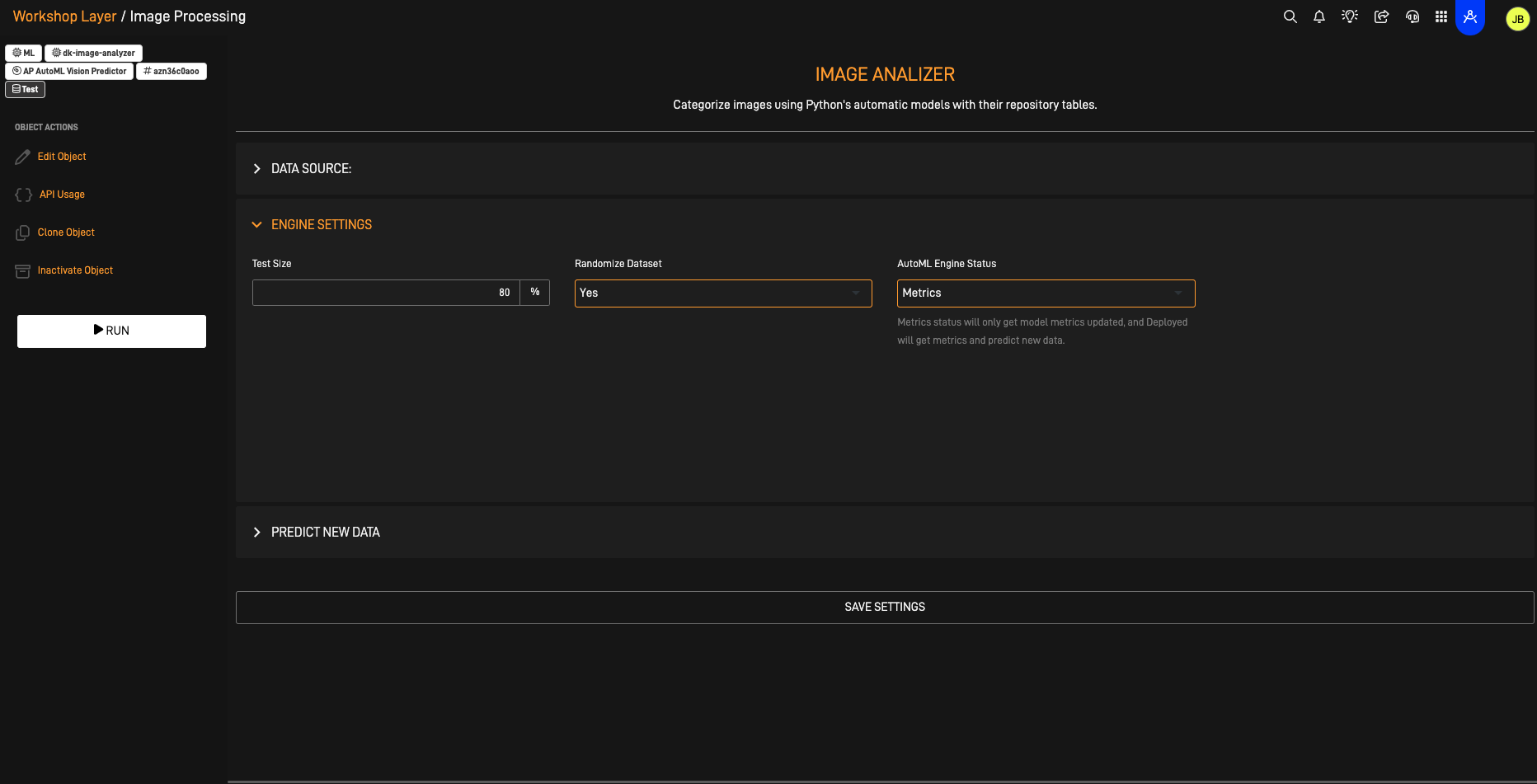

Arpia’s AutoML functionality leverages AutoGluon and can be extended through YOLO for specialized, high-speed applications in image processing and object detection. These models cater to different AI needs, with AutoGluon excelling in classification tasks and YOLO in real-time object detection. Through Arpia Managed Services, clients can access configurations tailored to complex scenarios, such as high-speed detection in camera, drone, and radar footage.

🔍 AutoGluon Models

- Purpose and Strengths: AutoGluon is designed for general-purpose machine learning tasks and performs well on structured (tabular) data, image classification, and time series forecasting. It is known for:

  - Model Selection and Ensemble Capabilities: AutoGluon automatically trains and compares candidate models, then combines the strongest ones into ensembles to improve predictive accuracy and robustness.

  - Image Classification: Suited to scenarios that need high-level image categorization, such as quality control or content tagging.

  - Time Series Analysis: Supports trend forecasting and predictive maintenance on sequential data.

- Advanced Configurations for Complex Use Cases:

  - Model Compatibility and Conversion: For real-time object detection needs, AutoGluon models can be converted to ONNX format, making them compatible with high-speed inference engines such as NVIDIA TensorRT.

  - Inference Optimization: Converted models can then be optimized with tools such as TensorRT or OpenVINO for high-speed deployment, reducing inference latency and narrowing the gap with YOLO’s real-time performance.
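AutoGluon performs model selection and ensembling internally; for tabular data, a call such as `TabularPredictor(label=...).fit(train_data)` trains several model families and combines them. To make the ensembling idea concrete, here is a minimal, stdlib-only sketch of greedy ensemble selection, a standard way to weight an ensemble using validation predictions. It illustrates the concept and is not AutoGluon’s implementation; the model names and probabilities are hypothetical toy data.

```python
from collections import Counter
from typing import Dict, List


def brier(probs: List[float], labels: List[int]) -> float:
    """Mean squared error between predicted probabilities and 0/1 labels (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def greedy_ensemble(
    model_probs: Dict[str, List[float]], labels: List[int], rounds: int = 10
) -> Dict[str, float]:
    """Greedy ensemble selection with replacement.

    Each round adds the model whose inclusion gives the lowest validation
    Brier score for the averaged ensemble. A model may be picked repeatedly;
    its final weight is how often it was picked divided by `rounds`.
    """
    chosen: List[str] = []
    n = len(labels)
    for _ in range(rounds):
        best_name, best_score = "", float("inf")
        for name in model_probs:
            candidate = chosen + [name]
            # Average the validation probabilities of the candidate ensemble.
            avg = [sum(model_probs[m][i] for m in candidate) / len(candidate) for i in range(n)]
            score = brier(avg, labels)
            if score < best_score:  # strict '<' keeps the first model on ties
                best_name, best_score = name, score
        chosen.append(best_name)
    counts = Counter(chosen)
    return {name: counts[name] / rounds for name in model_probs}


if __name__ == "__main__":
    # Hypothetical validation predictions from three base models.
    labels = [1, 0, 1, 1, 0]
    model_probs = {
        "gbm": [0.9, 0.2, 0.6, 0.4, 0.1],
        "knn": [0.6, 0.4, 0.9, 0.8, 0.3],
        "linear": [0.5, 0.5, 0.5, 0.5, 0.5],
    }
    print(greedy_ensemble(model_probs, labels))
```

Selecting with replacement lets a strong model earn a larger weight, while weak models (such as the constant "linear" predictor here) tend to receive little or none.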

🚀 YOLO Models

- Purpose and Strengths: YOLO (You Only Look Once) models are optimized for real-time object detection, suitable for identifying and locating objects within images or video streams. YOLO is an excellent choice for applications that demand rapid and continuous processing, such as live surveillance or real-time analytics.

- Hybrid YOLO-AutoGluon Pipeline:

  - For scenarios requiring both detection and high-accuracy classification, YOLO can serve as a front-line detector, quickly identifying and cropping regions of interest within images. These regions can then be passed to an AutoGluon classifier for refined categorization, balancing YOLO’s speed with AutoGluon’s detailed accuracy.


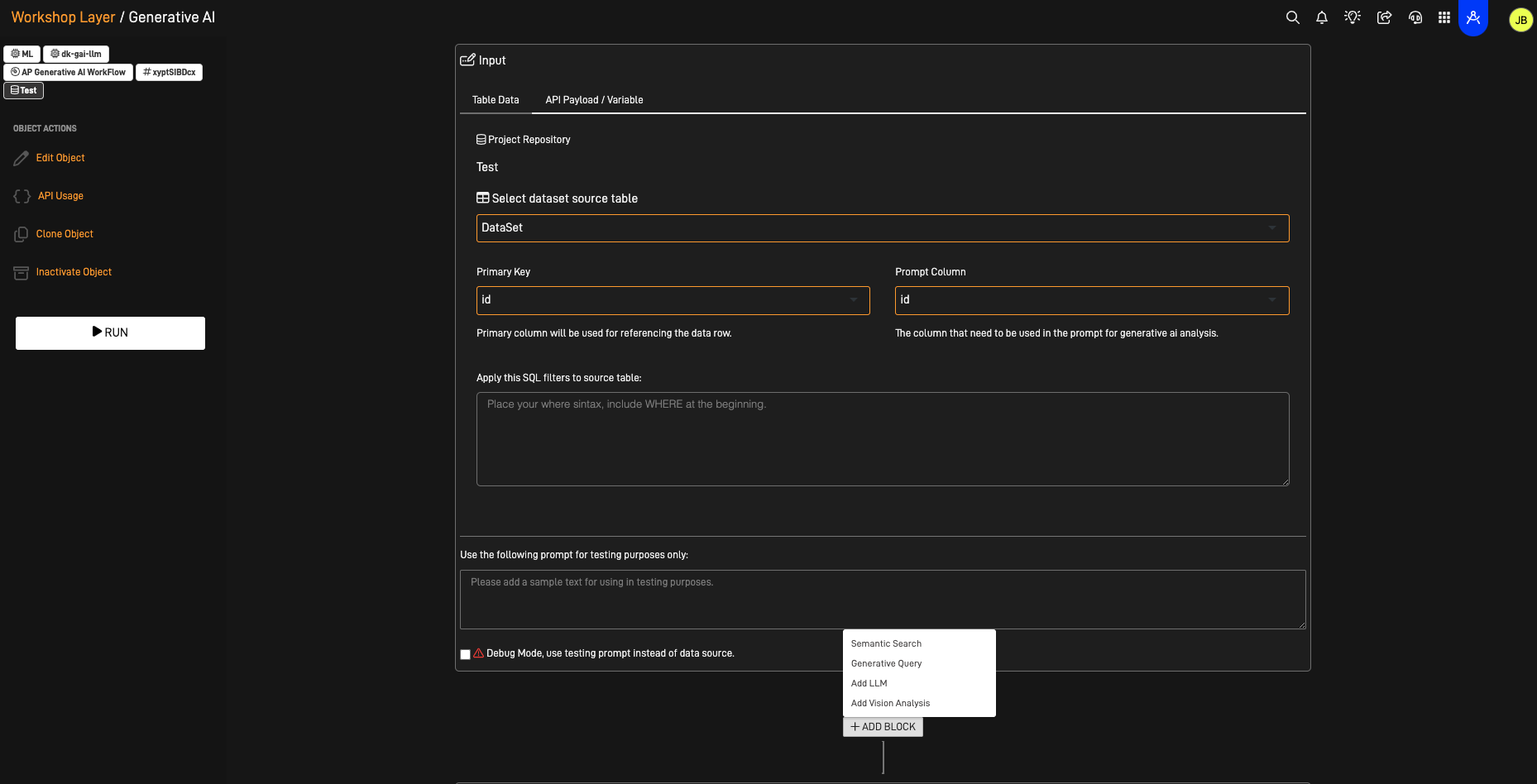
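The hybrid pipeline can be sketched as two stages: filter the detector’s boxes by confidence, suppress overlapping duplicates, crop each surviving region, and classify the crop. In this minimal sketch the detector and classifier are hypothetical callables standing in for a YOLO model and an AutoGluon classifier; the 0.25 confidence and 0.5 IoU thresholds are assumed values. Many YOLO implementations already apply non-maximum suppression internally, so the `nms` step is shown only to make the flow explicit.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels; x2 and y2 are exclusive
Image = List[List[int]]          # toy grayscale image: a list of pixel rows


@dataclass
class Detection:
    box: Box
    score: float  # detector confidence in [0, 1]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets: Sequence[Detection], iou_thresh: float = 0.5) -> List[Detection]:
    """Greedy non-maximum suppression: keep the highest-scoring box in each overlapping group."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        if all(iou(det.box, k.box) < iou_thresh for k in kept):
            kept.append(det)
    return kept


def crop(img: Image, box: Box) -> Image:
    """Cut out the region of interest, clipping the box to the image bounds."""
    height, width = len(img), len(img[0])
    x1, y1 = max(0, box[0]), max(0, box[1])
    x2, y2 = min(width, box[2]), min(height, box[3])
    return [row[x1:x2] for row in img[y1:y2]]


def detect_then_classify(
    img: Image,
    detector: Callable[[Image], List[Detection]],
    classifier: Callable[[Image], str],
    min_score: float = 0.25,
) -> List[Tuple[Box, str]]:
    """Stage 1: a fast detector proposes boxes. Stage 2: a classifier labels each crop."""
    confident = [d for d in detector(img) if d.score >= min_score]
    return [(d.box, classifier(crop(img, d.box))) for d in nms(confident)]


if __name__ == "__main__":
    # 8x8 black image with a bright 3x3 square covering x, y in [2, 5).
    image = [[255 if 2 <= x < 5 and 2 <= y < 5 else 0 for x in range(8)] for y in range(8)]

    def fake_detector(_: Image) -> List[Detection]:
        # Stand-in for a YOLO model: a good box, a near-duplicate, and a low-confidence box.
        return [Detection((2, 2, 5, 5), 0.9), Detection((2, 2, 5, 6), 0.6), Detection((0, 6, 2, 8), 0.1)]

    def fake_classifier(patch: Image) -> str:
        # Stand-in for an AutoGluon image classifier: thresholds mean brightness.
        mean = sum(map(sum, patch)) / (len(patch) * len(patch[0]))
        return "bright" if mean > 127 else "dark"

    print(detect_then_classify(image, fake_detector, fake_classifier))
    # [((2, 2, 5, 5), 'bright')]
```

Keeping the two stages behind plain callables means either model can be swapped without touching the cropping and filtering logic.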

💬 Large Language Models (LLMs) for Generative AI

Arpia’s platform includes a range of LLMs for generative and analytical tasks, supporting both locally hosted and cloud-hosted models. This flexibility allows Arpia to meet privacy and performance requirements, enabling organizations to choose models suited to each task within a workflow.

🌐 Supported LLM Models

- Jina (Locally Hosted): Specializing in semantic search through embeddings, Jina is ideal for privacy-sensitive applications, particularly for multimodal data involving text and image embeddings.

- Llama3 (Locally Hosted): A general-purpose model that excels at contextual understanding, question answering, and dialogue-based tasks; it is hosted locally for secure implementations.

- OpenAI GPT Models (Cloud-Hosted): Including GPT-4, GPT-4o, and GPT-4o mini, these models offer flexibility in content generation, structured data creation, and conversational AI.

- Claude by Anthropic (Cloud-Hosted): Models such as Claude Sonnet are designed with an emphasis on safety and controllable behavior, making them well suited to high-quality responses in conversational and generative scenarios.

  - Available Claude models:

    - Claude 3 Haiku: Fast and efficient, ideal for everyday tasks.

    - Claude 3.5 Sonnet: The most intelligent of these models, well suited to complex tasks.

    - Claude 3 Opus: Excels at writing and nuanced content creation.

For the latest details on model capabilities, visit the official Anthropic website or API documentation.
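Jina’s role in this lineup is embedding-based semantic search: documents and queries are turned into vectors, and the documents whose vectors point in the most similar direction are returned. A minimal sketch of that ranking step using cosine similarity, assuming the embeddings have already been computed; the vectors and document IDs below are hypothetical toy values, not output from a Jina model.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k documents whose embeddings are most similar to the query embedding."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real embedding models produce much longer vectors.
    index = {
        "invoice_policy": [0.9, 0.1, 0.0],
        "drone_manual": [0.0, 0.8, 0.6],
        "refund_faq": [0.7, 0.3, 0.1],
    }
    query = [1.0, 0.2, 0.0]
    for doc_id, score in top_k(query, index):
        print(f"{doc_id}: {score:.3f}")
```

A linear scan is fine for a handful of documents; larger collections would normally sit behind a vector index.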

🔍 Capabilities in Workflow Configurations

- Semantic Search: Embedding-based search with models like Jina to retrieve relevant data points, enhancing the Knowledge Grid layer.

- Content Generation and Summarization: OpenAI’s GPT and Anthropic’s Claude models generate structured content, summaries, and responses, improving data-driven workflows.

- Prompt Engineering with Structured Responses: Prompts that request a fixed output format (for example, JSON with named fields) yield contextually aware responses in a consistent structure that downstream applications can consume reliably.

- Contextual Recommendations: Uses insights from prior workflow steps to produce relevant recommendations, ideal for decision support or customer interaction.
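Structured responses only help downstream steps if they are checked before use. A minimal sketch, assuming the prompt asks the model to reply with a JSON object: the reply is parsed and validated against a small schema, and any mismatch raises an error the workflow can act on (for example, by re-prompting). `SUMMARY_SCHEMA` and its fields are hypothetical, and provider-side structured-output features are not shown.

```python
import json
from typing import Any, Dict

# Hypothetical schema for a summary step: field name -> expected Python type.
SUMMARY_SCHEMA: Dict[str, type] = {"title": str, "risk_level": str, "items_detected": int}


def parse_structured_response(raw: str, schema: Dict[str, type]) -> Dict[str, Any]:
    """Parse an LLM reply as JSON and check that required fields exist with the right types.

    Raises ValueError so the workflow can re-prompt or stop instead of
    passing malformed data downstream.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")
    for field, expected in schema.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        value = data[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise ValueError(f"field {field!r} should be {expected.__name__}")
    return data


if __name__ == "__main__":
    reply = '{"title": "Line 3 inspection", "risk_level": "low", "items_detected": 4}'
    print(parse_structured_response(reply, SUMMARY_SCHEMA))
```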

⚙️ Flexible Workflow Model Selection in Arpia

Arpia enables users to configure workflows incorporating multiple models, allowing for an optimal blend of:

- Speed: Selecting models known for rapid responses in real-time interactions.

- Reasoning and Precision: Leveraging advanced models like GPT-4 for complex analysis and nuanced language understanding.
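The selection logic in these two bullets, combined with the privacy constraint from the LLM overview, can be sketched as a small routing function: sensitive data is restricted to locally hosted models, and the remaining choice favors either speed or reasoning. Arpia’s actual configuration interface is not described here, so the catalog, its numeric ranks, and `choose_model` are hypothetical placeholders rather than benchmarks or platform APIs.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ModelProfile:
    name: str
    local: bool     # hosted inside the organization's environment
    speed: int      # relative speed rank, higher is faster (assumed values)
    reasoning: int  # relative reasoning rank, higher is stronger (assumed values)


# Hypothetical catalog; the ranks are illustrative placeholders, not measurements.
CATALOG = (
    ModelProfile("llama3", local=True, speed=2, reasoning=2),
    ModelProfile("gpt-4o-mini", local=False, speed=3, reasoning=2),
    ModelProfile("gpt-4", local=False, speed=1, reasoning=3),
)


def choose_model(sensitive: bool, needs_reasoning: bool, catalog: Sequence[ModelProfile] = CATALOG) -> str:
    """Keep sensitive data on local models, then prefer reasoning or speed as requested."""
    pool = [m for m in catalog if m.local] if sensitive else list(catalog)
    if not pool:
        raise ValueError("no model satisfies the hosting constraint")
    if needs_reasoning:
        best = max(pool, key=lambda m: (m.reasoning, m.speed))
    else:
        best = max(pool, key=lambda m: (m.speed, m.reasoning))
    return best.name


if __name__ == "__main__":
    print(choose_model(sensitive=False, needs_reasoning=True))
```

Applying the hosting constraint first and the speed/reasoning preference second keeps privacy a hard rule rather than one factor to be traded off.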

📋 Use Case Example

A workflow could involve YOLO for rapid object detection from live footage, followed by AutoGluon for detailed classification of detected regions. Simultaneously, Jina could perform semantic search on historical data, with OpenAI’s GPT-4 generating a summary report that combines real-time analysis with historical insights.

This configuration provides high precision, real-time performance, and adaptability, suited for data-intensive operations in a secure environment.
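In this example the vision branch (detection plus classification) and the historical search do not depend on each other, while the summary report depends on both. A minimal asyncio sketch of that dependency structure, in which every step is a hypothetical stub with simulated latency; no real YOLO, AutoGluon, Jina, or OpenAI calls are made.

```python
import asyncio
from typing import Dict, List


# Hypothetical stand-ins for each workflow step.
async def detect_and_classify(frame_id: str) -> List[str]:
    await asyncio.sleep(0.01)  # simulate detection + classification latency
    return ["vehicle", "person"]


async def search_history(query: str) -> List[str]:
    await asyncio.sleep(0.01)  # simulate semantic-search latency
    return ["historical record 1", "historical record 2"]


async def summarize(live: List[str], history: List[str]) -> str:
    await asyncio.sleep(0.01)  # simulate an LLM call
    return f"Detected {', '.join(live)}; related records: {', '.join(history)}."


async def run_workflow(frame_id: str, query: str) -> Dict[str, object]:
    # The vision branch and the historical search run concurrently...
    live, history = await asyncio.gather(detect_and_classify(frame_id), search_history(query))
    # ...and the summary step waits for both results.
    report = await summarize(live, history)
    return {"live": live, "history": history, "report": report}


if __name__ == "__main__":
    print(asyncio.run(run_workflow("frame-001", "past incidents at this site")))
```

Running the independent branches concurrently means end-to-end latency is roughly the slower branch plus the summary, rather than the sum of all three steps.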

🔔 Conclusion

Supporting both AutoML (AutoGluon) and LLM (Generative AI) models, Arpia provides a robust, flexible platform for advanced data processing, predictive analytics, and real-time intelligence. This comprehensive model ecosystem integrates seamlessly into workflows, delivering data-driven insights for the modern enterprise.

Updated over 1 year ago