Data Governance - Usage Statistics

Data governance platforms often track how frequently and by whom data is accessed. This includes information on the most queried tables, the type of queries run (e.g., read, write, update, delete), and the performance of these queries (such as execution times).

Usage statistics in the context of a Data Governance Platform provide critical insights into how data within an organization is accessed and utilized. These metrics are fundamental for optimizing data management strategies, ensuring efficient use of resources, and improving overall data access patterns.

What are Usage Statistics?

Usage statistics refer to the collection, measurement, and analysis of data pertaining to the access and use of information stored in databases, data lakes, or any other data repositories. This information typically includes details about which tables and databases are accessed, the frequency of access, the types of operations performed (e.g., read, write, update, delete), and the users or applications making those accesses.
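As an illustration, the following minimal Python sketch models a single access event and aggregates a batch of events into the statistics listed above: access counts per table, operation types per table, accesses per user or application, and average execution time. The `AccessEvent` fields and the `summarize` function are hypothetical. They are not part of any particular platform's API.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AccessEvent:
    # Hypothetical event shape; real platforms define their own schema.
    table: str          # e.g. "sales.orders"
    operation: str      # "read", "write", "update" or "delete"
    principal: str      # user or application that ran the query
    timestamp: datetime
    duration_ms: float  # query execution time in milliseconds


def summarize(events):
    """Aggregate raw access events into basic usage statistics."""
    by_table = Counter(e.table for e in events)
    by_operation = Counter((e.table, e.operation) for e in events)
    by_principal = Counter(e.principal for e in events)
    total_ms = defaultdict(float)
    for e in events:
        total_ms[e.table] += e.duration_ms
    return {
        "most_queried_tables": by_table.most_common(),
        "operations_per_table": dict(by_operation),
        "accesses_per_principal": dict(by_principal),
        "avg_duration_ms": {t: total_ms[t] / n for t, n in by_table.items()},
    }


events = [
    AccessEvent("sales.orders", "read", "bi_dashboard", datetime(2024, 1, 1, 9), 120.0),
    AccessEvent("sales.orders", "read", "alice", datetime(2024, 1, 1, 10), 95.0),
    AccessEvent("hr.staff", "update", "hr_app", datetime(2024, 1, 1, 11), 40.0),
]
stats = summarize(events)
print(stats["most_queried_tables"])  # [('sales.orders', 2), ('hr.staff', 1)]
```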

Importance of Usage Statistics

- Resource Optimization: By monitoring which data is accessed most frequently, organizations can optimize their storage and computing resources. For instance, frequently accessed data can be moved to faster, more accessible storage solutions, whereas rarely used data can be archived in cheaper, slower storage.

- Performance Tuning: Usage statistics help identify bottlenecks and performance issues in data access patterns. This allows database administrators to fine-tune indexes, queries, and database configurations to improve performance and reduce latency.

- Capacity Planning: Understanding long-term trends in data access helps in anticipating future needs and planning for capacity upgrades. This ensures that the data infrastructure scales appropriately with the growing demands of the organization.

- Security and Compliance: Tracking who accesses data and what operations they perform is critical for security audits and compliance reporting. It helps in detecting unauthorized access and ensuring that data usage complies with legal and regulatory standards.

- User and Application Profiling: By analyzing usage patterns, organizations can identify which departments or applications rely most heavily on certain data sets. This aids in understanding the impact of data on business processes and prioritizing data governance initiatives accordingly.

- Cost Management: In cloud-based environments, where data storage and access can incur significant costs, usage statistics are vital for managing expenses. They help in identifying costly operations and optimizing them to reduce overall expenditure.
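To make resource optimization concrete, here is a minimal sketch that assigns tables to storage tiers based on how often they were accessed during a review window. The function name, the tier labels, and the threshold values are hypothetical policy choices, not values recommended by any platform.

```python
def assign_storage_tiers(access_counts, hot_threshold, cold_threshold):
    """Map each table to a storage tier from its access count in a review window.

    The thresholds are hypothetical policy values; tune them to your own workload.
    """
    if cold_threshold >= hot_threshold:
        raise ValueError("cold_threshold must be lower than hot_threshold")
    tiers = {}
    for table, count in access_counts.items():
        if count >= hot_threshold:
            tiers[table] = "hot"        # candidate for faster storage
        elif count <= cold_threshold:
            tiers[table] = "archive"    # candidate for cheaper, slower storage
        else:
            tiers[table] = "standard"   # leave where it is
    return tiers


counts_last_30_days = {"sales.orders": 5400, "hr.staff": 310, "legacy.audit_2019": 0}
print(assign_storage_tiers(counts_last_30_days, hot_threshold=1000, cold_threshold=5))
# {'sales.orders': 'hot', 'hr.staff': 'standard', 'legacy.audit_2019': 'archive'}
```

In practice the counts would come from the platform's usage statistics, and tier changes would usually be reviewed before data is actually moved.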

Collecting Usage Statistics

The collection of usage statistics typically involves several components of the data infrastructure:

- Database Management Systems (DBMS) often provide built-in tools or logs that record access patterns and query performance.

- Data Access Layers in applications can also be instrumented to log details about data operations.

- Custom Monitoring Tools and integration with enterprise monitoring solutions can provide a more comprehensive view by aggregating data across multiple systems.
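For the data access layer approach, one common Python pattern is a decorator that records every data operation. This sketch uses a hypothetical `track_usage` decorator and an in-memory `usage_log` list that stands in for a real monitoring backend. For each call, it records the table, the operation, whether the call succeeded, and how long it took.

```python
import functools
import time

usage_log = []  # in-memory stand-in for a real monitoring or logging backend


def track_usage(table, operation):
    """Hypothetical decorator that records one usage entry per call."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = False
            try:
                result = func(*args, **kwargs)
                ok = True
                return result
            finally:
                # Runs on success and on error, so failed calls are recorded too.
                usage_log.append({
                    "table": table,
                    "operation": operation,
                    "function": func.__name__,
                    "ok": ok,
                    "duration_ms": (time.perf_counter() - start) * 1000,
                })
        return wrapper
    return decorator


@track_usage("sales.orders", "read")
def fetch_orders(customer_id):
    # Placeholder for a real query against the database.
    return [{"order_id": 1, "customer_id": customer_id}]


fetch_orders(42)
print(usage_log[-1]["table"], usage_log[-1]["operation"], usage_log[-1]["ok"])
# sales.orders read True
```

A production version would typically also record who made the call (the user or service identity) and send entries to a central store instead of keeping them in memory.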

Analyzing and Reporting

Data gathered from monitoring usage must be analyzed to transform raw data into actionable insights. This often involves:

- Dashboards: Real-time monitoring of key metrics to provide an at-a-glance view of data usage.

- Alerting Systems: Automated alerts that notify administrators of unusual access patterns or potential security breaches.

- Reports: Detailed reports that summarize usage over time, helping in strategic planning and operational reviews.
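A simple way to start alerting on unusual access patterns is to compare each user's activity with that user's own baseline. This sketch flags a principal when today's access count exceeds the mean of past daily counts plus `k` standard deviations. The function name, its parameters, and the mean-plus-k-sigma rule are illustrative assumptions, and real alerting systems often use more robust methods.

```python
from statistics import mean, pstdev


def unusual_access(history, today, k=3.0, min_std=1.0):
    """Return (principal, count, reason) for principals far above their baseline.

    history: {principal: [daily access counts over a baseline window]}
    today:   {principal: access count today}
    min_std keeps very stable users from being flagged for tiny fluctuations.
    """
    alerts = []
    for principal, count in today.items():
        past = history.get(principal)
        if not past:
            alerts.append((principal, count, "no baseline"))
            continue
        threshold = mean(past) + k * max(pstdev(past), min_std)
        if count > threshold:
            alerts.append((principal, count, f"threshold {threshold:.1f}"))
    return alerts


history = {"alice": [20, 22, 19, 21, 18], "etl_job": [500, 510, 495, 505, 490]}
today = {"alice": 240, "etl_job": 502, "new_service": 15}
for alert in unusual_access(history, today):
    print(alert)
```

Here `alice` is flagged because 240 accesses is far above her usual 20 per day, and `new_service` is flagged because it has no history. `etl_job` stays within its normal range.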

By leveraging usage statistics effectively, organizations can not only ensure their data governance is proactive but also enhance the overall efficiency and security of their data management practices.

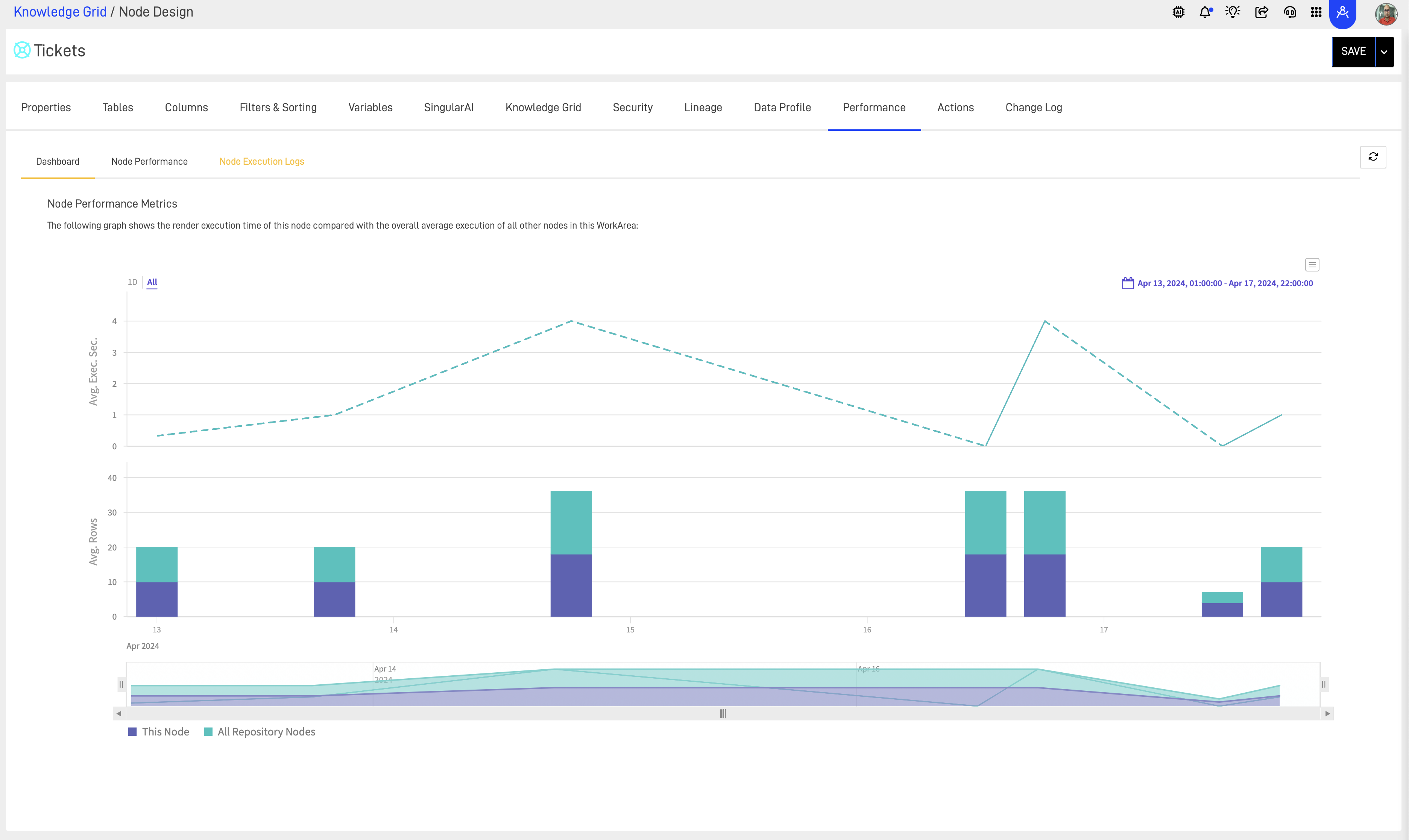

Usage Statistics at KG Nodes in AP

You can view usage statistics for any KG Node in AP in the node settings, on the Performance tab, which shows detailed usage information.

The Performance tab shows how the node performs, including its execution time in seconds and the number of rows delivered. It also shows whether the node is optimized, and it provides an execution log covering the last 3 months, which you can use to look for performance bottlenecks or errors in data delivery.
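If you want to analyze execution log entries outside AP, a short script can summarize them. This sketch assumes each entry has a start time, a duration in seconds, a row count, and a status. These field names and the `review_execution_log` function are hypothetical and may not match AP's actual log format. The script keeps the last 90 days, lists failed runs, and flags runs that took more than twice the median duration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class ExecutionRecord:
    # Hypothetical fields; the real AP execution log may use different names.
    started_at: datetime
    duration_s: float
    rows_delivered: int
    status: str  # e.g. "success" or "error"


def review_execution_log(records, now, days=90, slow_factor=2.0):
    """Summarize recent runs: failures, unusually slow runs and throughput."""
    cutoff = now - timedelta(days=days)
    recent = [r for r in records if r.started_at >= cutoff]
    if not recent:
        return {"runs": 0, "errors": [], "slow_runs": [], "median_s": None, "rows_per_s": None}
    typical = median([r.duration_s for r in recent])
    total_s = sum(r.duration_s for r in recent)
    return {
        "runs": len(recent),
        "errors": [r for r in recent if r.status != "success"],
        "slow_runs": [r for r in recent if r.duration_s > slow_factor * typical],
        "median_s": typical,
        "rows_per_s": sum(r.rows_delivered for r in recent) / total_s if total_s else None,
    }


log = [
    ExecutionRecord(datetime(2024, 6, 1), 10.0, 1000, "success"),
    ExecutionRecord(datetime(2024, 6, 2), 12.0, 1100, "success"),
    ExecutionRecord(datetime(2024, 6, 3), 45.0, 1050, "success"),
    ExecutionRecord(datetime(2024, 6, 4), 11.0, 0, "error"),
    ExecutionRecord(datetime(2024, 1, 15), 300.0, 900, "success"),  # older than 90 days
]
report = review_execution_log(log, now=datetime(2024, 6, 30))
print(report["runs"], len(report["errors"]), [r.duration_s for r in report["slow_runs"]])
# 4 1 [45.0]
```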
