AI Apps

AI Apps Overview

The Reasoning Flows component for orchestrating complete, data-driven applications.

Purpose

AI Apps represent complete data-driven applications within Reasoning Flows. They act as the central orchestration point where related components — such as data preparation tools, notebooks, machine learning models, APIs, and visualizations — are logically grouped into a unified structure.

By creating an AI App, teams can structure projects into cohesive, deployable units, simplifying management, navigation, and execution across the Reasoning Flows ecosystem.

Where It Fits in Reasoning Flows

Within the Reasoning Flows architecture:

- Extract & Load ingests and structures data.

- Repository Tables register datasets for reuse.

- Transform & Prepare cleans and formats data for analytics.

- AI & Machine Learning builds and trains predictive or generative models.

- Visual Objects deliver insights through notebooks, dashboards, or APIs.

- Data Models (Nodes) define logical entities and metadata relationships.

- AI Apps bring all of these components together into a single operational application.

Goal: AI Apps are the execution and delivery layer — turning Reasoning Flows projects into deployable, interactive applications.

Key Features

Application Context

Defines a complete data application inside Reasoning Flows, combining ETL pipelines, ML models, APIs, and visual assets.

Logical Grouping

Groups related development objects into a single project scope — enabling consistent orchestration and versioning.

Workflow Management

Serves as a control hub for scheduling, dependency tracking, and execution order.
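Dependency tracking and execution order come down to running each component only after its prerequisites have finished. The sketch below illustrates that idea with Python's standard-library `graphlib`. It is not how Reasoning Flows schedules work internally. The component IDs (`datapipe_mysql`, `prepared_sales`, and so on) and the edges between them are hypothetical, loosely modeled on the Sales_Forecasting_App example below.

```python
from __future__ import annotations

from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical component IDs loosely based on the Sales_Forecasting_App example.
# Each key maps to the set of components that must finish before it can run.
DEPENDENCIES = {
    "prepared_sales": {"datapipe_mysql"},
    "sql_monthly": {"prepared_sales"},
    "automl_forecast": {"sql_monthly"},
    "dashboard": {"automl_forecast"},
    "weekly_report": {"automl_forecast"},
    "bi_refresh_webhook": {"dashboard"},
}


def execution_order(deps: dict[str, set[str]]) -> list[str]:
    """Return one valid run order in which every component follows its prerequisites."""
    return list(TopologicalSorter(deps).static_order())


def execution_stages(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group components into stages; members of a stage do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


if __name__ == "__main__":
    for number, stage in enumerate(execution_stages(DEPENDENCIES), start=1):
        print(f"stage {number}: {', '.join(stage)}")
```

Components in the same stage have no dependency on each other, so a scheduler could run them in parallel. Both `static_order()` and `prepare()` raise `graphlib.CycleError` if the dependencies form a loop.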

Deployment Integration

Can be included in deployment processes to define the boundaries and logic of an entire application.

Recommended Use Cases

- Building and deploying a data product or AI-powered service (e.g., forecasting app, semantic search API)

- Structuring multi-component solutions that involve data pipelines, model training, and inference endpoints

- Managing application-level dependencies across diverse Reasoning Flows objects

- Creating a logical hierarchy for reusable assets within enterprise-scale environments

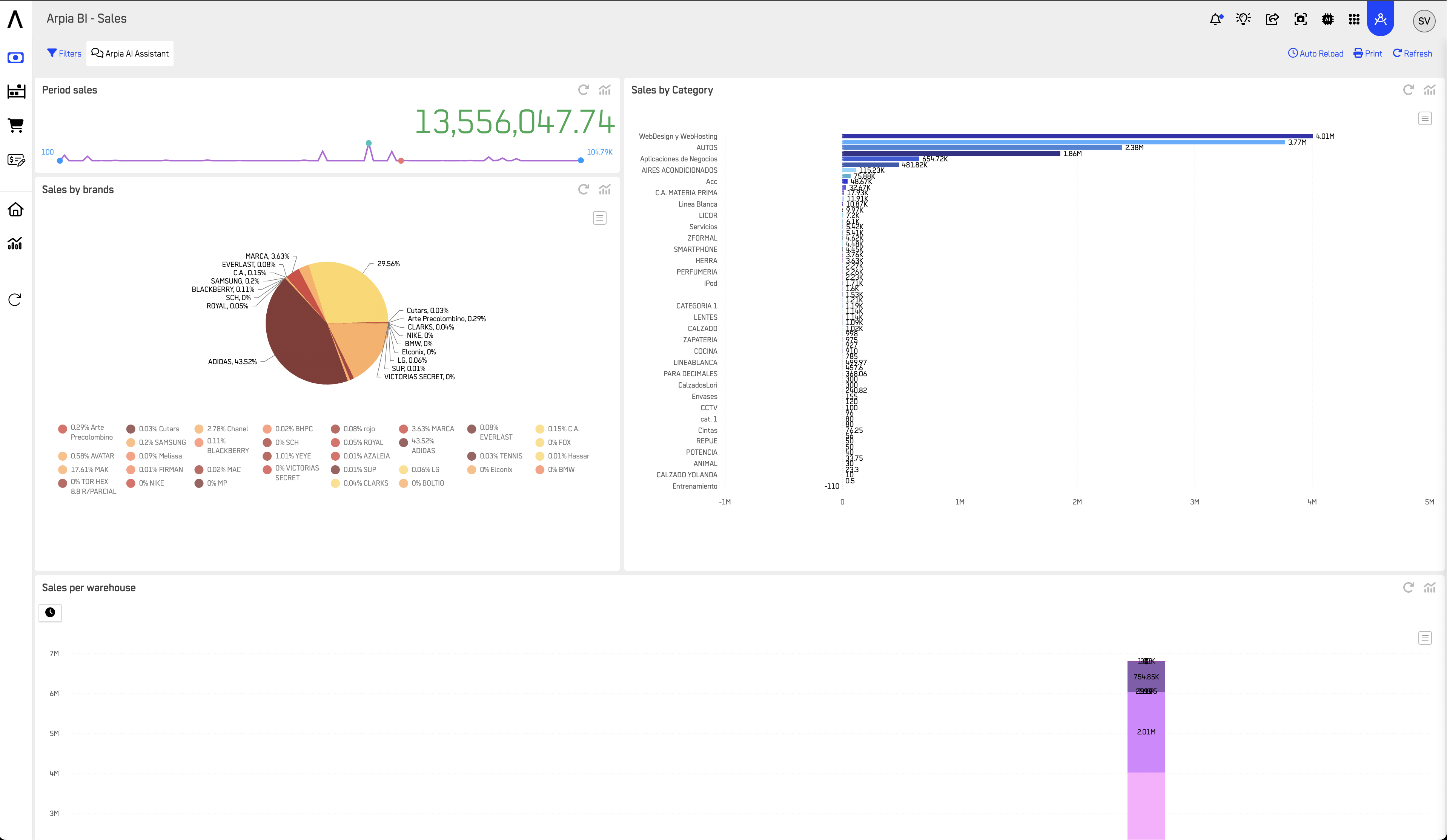

Visual Example

Example: An AI App connecting ETL pipelines, prepared tables, AutoML models, and API endpoints into a single deployable unit.

Example AI App Structure

A typical AI App might include the following components:

Sales_Forecasting_App/
│
├── Extract & Load
│   └── AP DataPipe Engine - MySQL (daily sales import)
│
├── Transform & Prepare
│   ├── AP Prepared Table (clean sales data)
│   └── AP SQL Code Execution (aggregate to monthly)
│
├── AI & Machine Learning
│   └── AP AutoML TimeSeries GPU Engine (forecast model)
│
├── Visual Objects
│   ├── ARPIA Python Notebook (analysis)
│   └── Python 3.8 Advanced ML & Plotly (dashboard)
│
├── Data Models (Nodes)
│   ├── dm_sales
│   └── dm_forecast
│
└── Integrations
    ├── AP Notification Engine (weekly report)
    └── AP Web-Hook Sender (BI refresh trigger)
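One way to reason about this structure programmatically is to treat the AI App as a manifest that maps each Reasoning Flows category to the objects grouped under it. The `AIApp` class below is a hypothetical illustration, not a Reasoning Flows API. Object names are shortened from the tree above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIApp:
    """Hypothetical in-memory manifest of an AI App (not a Reasoning Flows API)."""

    name: str
    # Maps a category such as "Extract & Load" to the objects grouped under it.
    components: dict[str, list[str]] = field(default_factory=dict)

    def add(self, category: str, obj: str) -> None:
        """Register an object under a category, creating the category if needed."""
        self.components.setdefault(category, []).append(obj)

    def objects(self) -> list[tuple[str, str]]:
        """Flatten the manifest into (category, object) pairs in insertion order."""
        return [(cat, obj) for cat, objs in self.components.items() for obj in objs]


app = AIApp("Sales_Forecasting_App")
for category, obj in [
    ("Extract & Load", "AP DataPipe Engine - MySQL"),
    ("Transform & Prepare", "AP Prepared Table"),
    ("Transform & Prepare", "AP SQL Code Execution"),
    ("AI & Machine Learning", "AP AutoML TimeSeries GPU Engine"),
    ("Visual Objects", "ARPIA Python Notebook"),
    ("Visual Objects", "Python 3.8 Advanced ML & Plotly"),
    ("Data Models (Nodes)", "dm_sales"),
    ("Data Models (Nodes)", "dm_forecast"),
    ("Integrations", "AP Notification Engine"),
    ("Integrations", "AP Web-Hook Sender"),
]:
    app.add(category, obj)

# Summarize how many objects each category contributes to the app.
for category, objs in app.components.items():
    print(f"{category}: {len(objs)} object(s)")
```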

Naming Conventions

Use clear, business-context naming for AI Apps:

| Pattern | Example | Use Case |
|---|---|---|
| {Domain}_{Function}_App | Sales_Forecasting_App | Domain-specific applications |
| {Product}_App | Customer360_App | Product-focused applications |
| {Team}_{Project}_App | Finance_BudgetAnalysis_App | Team-specific projects |

Note: For readability, AI App names use PascalCase words joined by underscores. This differs from the table naming convention (snake_case_lowercase).
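If you want to check names before creating an AI App, the patterns in the table can be approximated with regular expressions. This is a minimal sketch that assumes a name consists of one or two PascalCase segments (digits allowed after the first letter) joined by underscores and ending in `_App`. The helper names are hypothetical, and Reasoning Flows itself may accept names that this check rejects.

```python
import re

# Hypothetical rule derived from the naming table: one or two PascalCase
# segments, each followed by an underscore, then the "App" suffix.
AI_APP_NAME = re.compile(r"(?:[A-Z][A-Za-z0-9]*_){1,2}App")

# Table names follow snake_case_lowercase instead.
TABLE_NAME = re.compile(r"[a-z][a-z0-9]*(?:_[a-z0-9]+)*")


def is_valid_app_name(name: str) -> bool:
    """True if the whole name matches the assumed AI App pattern."""
    return AI_APP_NAME.fullmatch(name) is not None


def is_valid_table_name(name: str) -> bool:
    """True if the whole name is lowercase snake_case."""
    return TABLE_NAME.fullmatch(name) is not None


for candidate in ["Sales_Forecasting_App", "Customer360_App", "sales_forecasting_app"]:
    print(candidate, is_valid_app_name(candidate))
```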

Best Practices

Use AI Apps as the top-level structure for projects — each major product or workflow should have its own AI App.

Keep all related objects grouped under one AI App for traceability. Avoid splitting related ETL, ML, and visualization components across multiple AI Apps.
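To spot related objects that have ended up split across AI Apps, a simple inventory check can list every object assigned to more than one app. The `find_split_objects` helper, the second app name `Sales_Reporting_App`, and the object IDs are hypothetical, and the sketch assumes you can export each AI App's object list yourself.

```python
from __future__ import annotations

from collections import defaultdict


def find_split_objects(apps: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return each object that appears in more than one AI App, with the apps that hold it."""
    owners: dict[str, list[str]] = defaultdict(list)
    for app_name in sorted(apps):  # sorted so the owner lists are deterministic
        for obj in apps[app_name]:
            owners[obj].append(app_name)
    return {obj: names for obj, names in owners.items() if len(names) > 1}


# Hypothetical inventory: the prepared sales table has been split across two apps.
inventory = {
    "Sales_Forecasting_App": {"datapipe_mysql", "prepared_sales", "automl_forecast"},
    "Sales_Reporting_App": {"prepared_sales", "dashboard"},
}

print(find_split_objects(inventory))
```

An empty result means every object belongs to exactly one AI App, which matches the grouping recommended above.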

Name AI Apps clearly using business context so stakeholders can identify the purpose at a glance.

Use Data Models (Nodes) to document linked data entities and dependencies within the AI App.

Integrate notifications and webhooks to automate reports, monitoring, and event triggers.

Register the AI App and its outcomes in the Reasoning Atlas for organization-wide visibility and governance.

Related Documentation

- Visual Object Overview: Learn how to create notebooks and visualization layers within an AI App.

- AI & Machine Learning Overview: Integrate trained models and inference engines into your AI App.

- Transform & Prepare: Prepare and clean data before inclusion in an application.

- Data Models (Nodes): Define logical structures and link them to your AI App.

- Reasoning Atlas Overview: Document and connect your AI App to organizational knowledge.
