How to Securely Transfer Data for ETL to ARPIA Repositories

This guide explains how to securely extract data from remote databases (e.g., Oracle in OCI) into the Arpia Platform using a temporary, encrypted OpenVPN tunnel. It includes step-by-step instructions for setting up the VPN on the client side and configuring an ETL job using the AI Workshop with Arpia Codex and SQLAlchemy. Ideal for secure, automated data transfers without exposing your database to the public internet.

📌 Overview

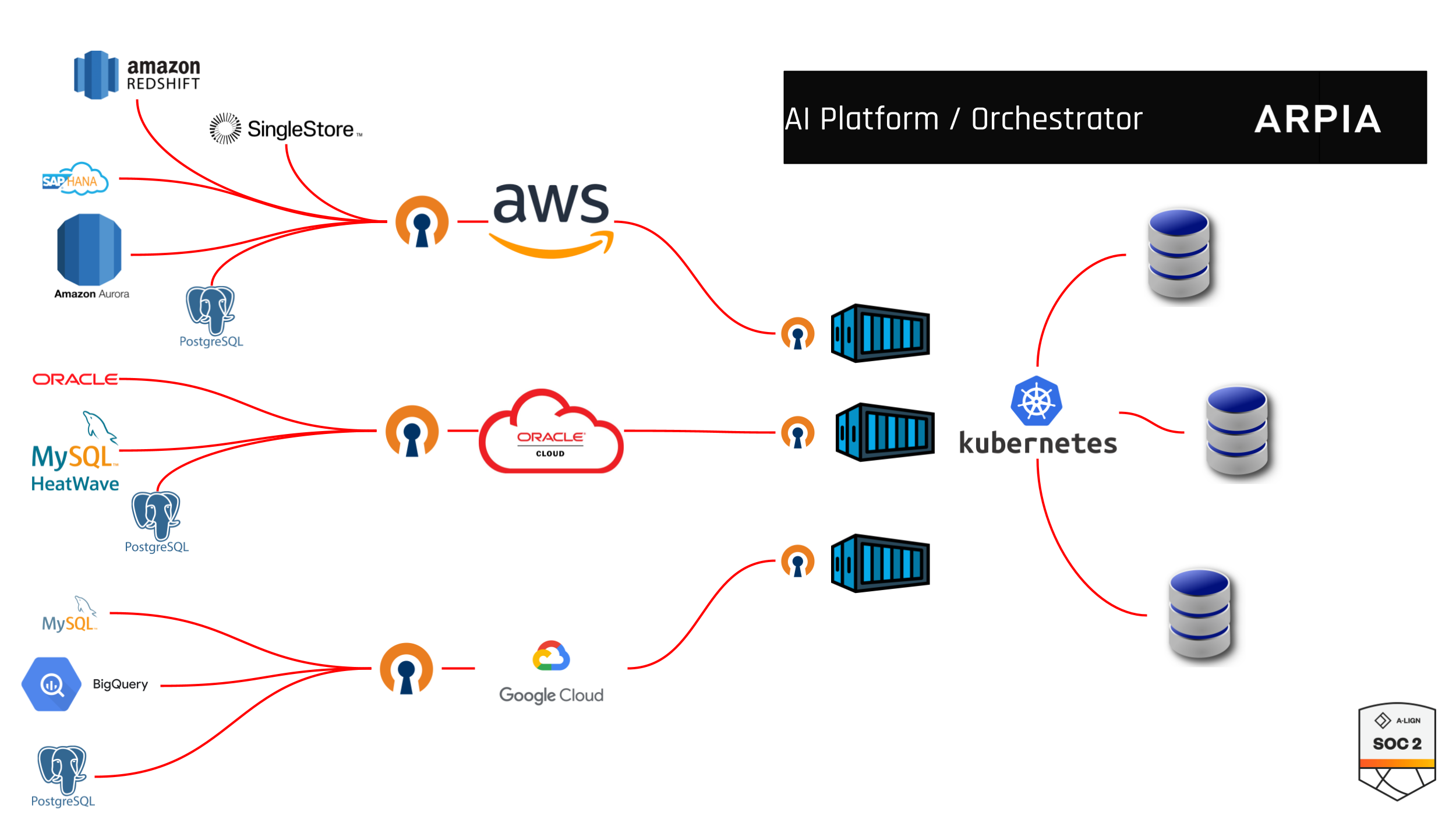

ARPIA enables secure data extraction from customer environments by leveraging OpenVPN and a Python-based ETL engine embedded within our AI Workshop.

This guide provides step-by-step instructions to set up a private, encrypted VPN tunnel and execute remote ETL jobs using SQLAlchemy, abstracting your database interactions securely into Arpia’s infrastructure.

🛠️ Requirements on Your Side

To ensure data privacy and network security, please follow the steps below:

Deploy a VPN Bridge (VM)

- Provision a lightweight VM within the same subnet as your destination database in your private network.

- Recommended OS: Ubuntu 20.04 or later.

Install OpenVPN Server

- Install OpenVPN using the community-maintained openvpn-install script:

    curl -O https://raw.githubusercontent.com/angristan/openvpn-install/master/openvpn-install.sh
    chmod +x openvpn-install.sh
    sudo ./openvpn-install.sh

During setup, choose:

- Protocol: UDP

- Port: 1194

- DNS: Any public provider (Google or Cloudflare recommended)

Generate a Client Profile

- After installation, run the script again and add a new client profile named arpia-client. This produces the .ovpn file used in the Arpia-side setup.

    sudo ./openvpn-install.sh

Configure Firewall in your Cloud Infrastructure

- Allow UDP port 1194 for Arpia’s source IP (we will share it with you securely).

- Allow internal access from the VPN bridge to your destination database.
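Before moving on, it can help to confirm from the VPN bridge that the database port is actually reachable. The following is a minimal sketch using only the Python standard library. `tcp_port_open` is a hypothetical helper name, and any host or port you pass in (for example Oracle's default listener port, 1521) is your own value, not something this guide prescribes. It checks TCP reachability only, so it cannot verify the UDP 1194 rule.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and attempts a full TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

Run on the bridge VM, a call such as `tcp_port_open("10.0.0.15", 1521)` (placeholder address) returning `False` usually points at a security-list or firewall rule that still blocks the bridge.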

⚙️ On the Arpia Side: AI Workshop Setup for Secure ETL

Once you have your .ovpn file, proceed with the following:

Step 1: Create a Workshop Object

- Go to your AI Workshop project inside the Arpia platform.

- Create a new object.

- Choose Type: Extract & Load

- Select Development Environment: Python 3.12 DataPipe Engine

Step 2: Upload the VPN Profile

- Inside the object, create a new file named: client.ovpn

- Paste the full contents of the .ovpn profile generated in your VM.
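A quick sanity check of the profile before pasting it can catch copy errors early. The sketch below is an assumption-laden illustration, not an Arpia tool: `summarize_profile` is a hypothetical helper that reads the standard OpenVPN `remote` and `proto` directives and checks for an inline `<ca>` block, which profiles generated by install scripts typically contain.

```python
def summarize_profile(profile_text: str) -> dict:
    """Extract the remote endpoints and protocol from an OpenVPN client profile."""
    remotes = []
    proto = None
    for raw_line in profile_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments ('#' or ';' in OpenVPN configs).
        if not line or line[0] in "#;":
            continue
        parts = line.split()
        if parts[0] == "remote" and len(parts) >= 2:
            # 'remote <host> [port]': the port is optional in OpenVPN.
            has_port = len(parts) >= 3 and parts[2].isdigit()
            remotes.append((parts[1], int(parts[2]) if has_port else None))
        elif parts[0] == "proto" and len(parts) >= 2:
            proto = parts[1]
    return {
        "remotes": remotes,
        "proto": proto,
        # Inline certificate authority block, opened and closed.
        "has_inline_ca": "<ca>" in profile_text and "</ca>" in profile_text,
    }
```

For a profile created with the settings chosen earlier, you would expect `proto` to be `udp` and the `remote` port to be 1194. An empty `remotes` list suggests the paste was truncated.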

Step 3: Connect & Code Using Arpia Codex

- Use Arpia Codex (our AI Dev Assistant) to help generate the required ETL code.

- Your script should:

  - Connect to the VPN tunnel (the Arpia runtime handles this when client.ovpn is present).

  - Use SQLAlchemy to connect to the remote database over the tunnel.

  - Extract the data and load it into the Arpia Repository of your Workshop object.
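The extract-and-load part of such a script can be sketched as follows. This is a minimal example under stated assumptions, not the template Arpia provides: `copy_rows` is a hypothetical helper, the tunnel is assumed to be up already, and both engines are ordinary SQLAlchemy engines. This guide does not specify how a Workshop object exposes its Arpia Repository as a target, so the target engine here is a stand-in.

```python
from sqlalchemy import text
from sqlalchemy.engine import Engine


def copy_rows(
    source: Engine,
    target: Engine,
    select_sql: str,
    insert_sql: str,
    batch_size: int = 1000,
) -> int:
    """Stream rows from source and insert them into target in batches.

    insert_sql must use named parameters (:col) matching the selected columns.
    Returns the number of rows copied.
    """
    copied = 0
    # target.begin() commits on success and rolls back if anything fails.
    with source.connect() as src, target.begin() as dst:
        # stream_results asks drivers that support it to avoid buffering
        # the whole result set in memory.
        result = src.execution_options(stream_results=True).execute(text(select_sql))
        for batch in result.mappings().partitions(batch_size):
            dst.execute(text(insert_sql), [dict(row) for row in batch])
            copied += len(batch)
    return copied
```

Batching keeps memory use flat on large extracts, and wrapping the load in one transaction means a dropped tunnel leaves the target unchanged rather than half-loaded.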

⚙️ Recommended Minimum Specifications

| Resource | Specification |
|---|---|
| vCPUs | 2 (Intel/AMD with AES-NI support preferred) |
| RAM | 2–4 GB |
| Disk | 20 GB SSD (minimum) |
| Bandwidth | 5–10 Mbps sustained |
| OS | Ubuntu 20.04 LTS or 22.04 LTS |
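To relate the bandwidth figure to job duration, a rough estimate is size in bits divided by link speed. The helper below is an illustrative sketch; `transfer_time_seconds` and its `efficiency` parameter (a stand-in for VPN and protocol overhead) are assumptions, not Arpia figures. With no overhead, 1 GB takes about 1,600 seconds (roughly 27 minutes) at 5 Mbps and about 800 seconds at 10 Mbps.

```python
def transfer_time_seconds(
    size_bytes: int, bandwidth_mbps: float, efficiency: float = 1.0
) -> float:
    """Estimate transfer time for size_bytes over a link of bandwidth_mbps.

    efficiency is the fraction of raw bandwidth available to payload data
    (1.0 means no overhead); real tunnels will be somewhat below that.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8
    # 1 Mbps = 1,000,000 bits per second.
    return bits / (bandwidth_mbps * 1_000_000 * efficiency)
```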

🔐 Security Best Practices

- SSH access should be secured with key-based authentication only.

- The VM firewall should only allow ports required for OpenVPN and SSH (default deny all others).

- Run OpenVPN under a non-root user, and monitor logs for unauthorized access attempts.

Remember

The OpenVPN VM must be able to reach your private network and must have a public IP address that is accessible from the ARPIA Platform networks.

Secure IP Access

Contact ARPIA Platform support to obtain the public IP addresses to allowlist, so that only ARPIA can reach the OpenVPN virtual machine.

📘 SQLAlchemy provides a robust abstraction layer, allowing you to switch between Oracle, MySQL, PostgreSQL, and other engines with minimal changes.
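As a small illustration of that point, only the connection URL changes between engines. In the sketch below, `source_url` and the `DRIVERS` mapping are hypothetical names, and the driver choices (`oracledb`, `pymysql`, `psycopg2`) are assumptions about what is installed in your environment. Building a URL does not require the driver itself; creating an engine from it does.

```python
from typing import Mapping, Optional

from sqlalchemy.engine import URL

# Assumed dialect+driver names; adjust to the drivers available to you.
DRIVERS = {
    "oracle": "oracle+oracledb",
    "mysql": "mysql+pymysql",
    "postgresql": "postgresql+psycopg2",
}


def source_url(
    kind: str,
    user: str,
    password: str,
    host: str,
    port: int,
    database: Optional[str] = None,
    query: Optional[Mapping[str, str]] = None,
) -> URL:
    """Build a SQLAlchemy URL for the given database kind."""
    return URL.create(
        DRIVERS[kind],
        username=user,
        password=password,  # URL.create escapes special characters for you
        host=host,
        port=port,
        database=database,
        # e.g. {"service_name": "..."} for Oracle service-name connections
        query=query or {},
    )
```

The rest of an ETL script (queries aside, where SQL dialects differ) can then stay the same while `kind` and the connection details vary.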

Step 4: Request a Ready-to-Use Template

- Use the support chat inside Arpia and request: “ETL Workshop Object Template with VPN for Remote Extraction”

- This will install a working object you can adjust using Codex.

🛡️ Security & Session Lifecycle

- The VPN tunnel is spawned dynamically and destroyed after task completion.

- All data is encrypted during transit.

- Your credentials and .ovpn file are stored securely in memory only during job execution.
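The lifecycle described above (tunnel created for the job, torn down afterwards) resembles a context-manager pattern. The sketch below only illustrates that pattern. It is not Arpia's runtime code, which this guide does not show. `temporary_tunnel` is a hypothetical name, and the command passed to it is up to the caller.

```python
import subprocess
from contextlib import contextmanager
from typing import Iterator, Sequence


@contextmanager
def temporary_tunnel(
    command: Sequence[str], stop_timeout: float = 10.0
) -> Iterator[subprocess.Popen]:
    """Start a tunnel process and guarantee it is stopped on exit."""
    proc = subprocess.Popen(command)
    try:
        yield proc
    finally:
        # Ask the process to exit, then force-kill it if it does not comply.
        proc.terminate()
        try:
            proc.wait(timeout=stop_timeout)
        except subprocess.TimeoutExpired:
            proc.kill()
            proc.wait()
```

With a standard OpenVPN client installed, the command would typically be `["openvpn", "--config", "client.ovpn"]`, and the ETL work would run inside the `with temporary_tunnel(...)` block so the tunnel is closed even if the job raises an error.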

✅ Best Practices

- Create a dedicated database user with read-only access.

- Monitor VPN usage logs from your side.

- Limit the VPN bridge’s scope to internal resources only.

🤝 Need Assistance?

Our team is available to help you:

- Set up the VPN bridge

- Validate the .ovpn file and test the connection

- Configure automation via our ETL API
