How to use the AI Assistant screen?

Step-by-Step Guide: AI Assistant

This guide walks through using the AI Assistant screen. The screen uses generative artificial intelligence to provide a chat interface that draws on our Knowledge Node as its core context.

The chat can answer complex questions, retrieve precise information from Nodes, and streamline data access, which supports decision-making and operational efficiency.

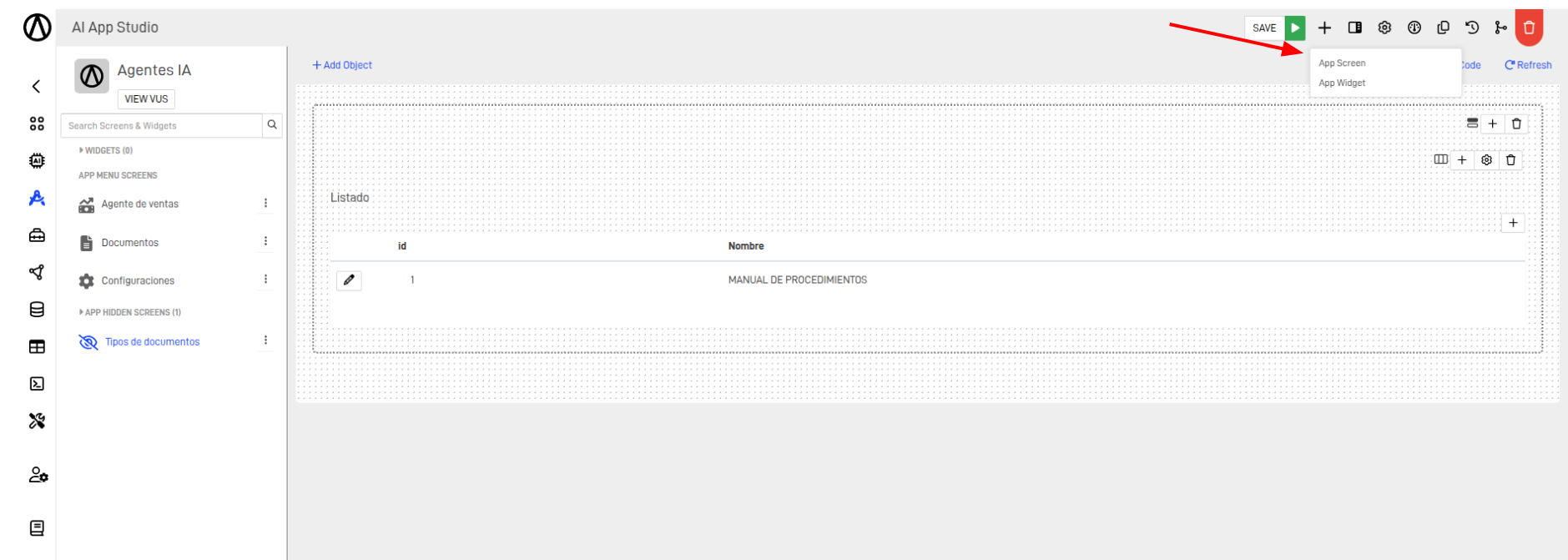

Step 1: Create a New App Screen in the Target Application

- Click the Add Screen button and select Add App Screen.

- Fill in the form and select the AI Assistant screen type:

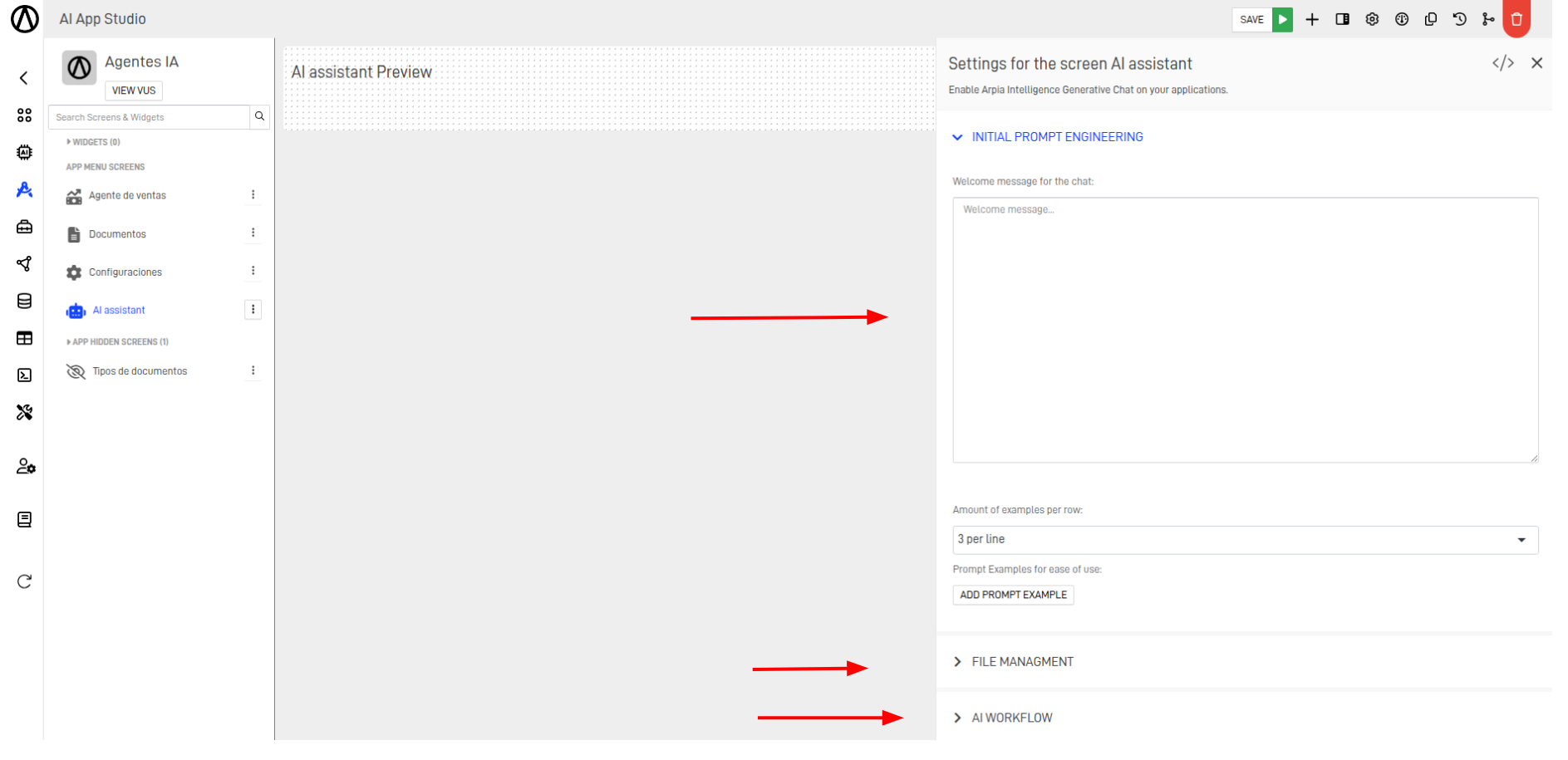

- The object has three blocks: Initial Prompt Engineering, File Management, and AI Workflow.

These foundational blocks set up the system. The steps that follow explain how to configure each one.

Step 2: Configure the "Initial Prompt Engineering" settings

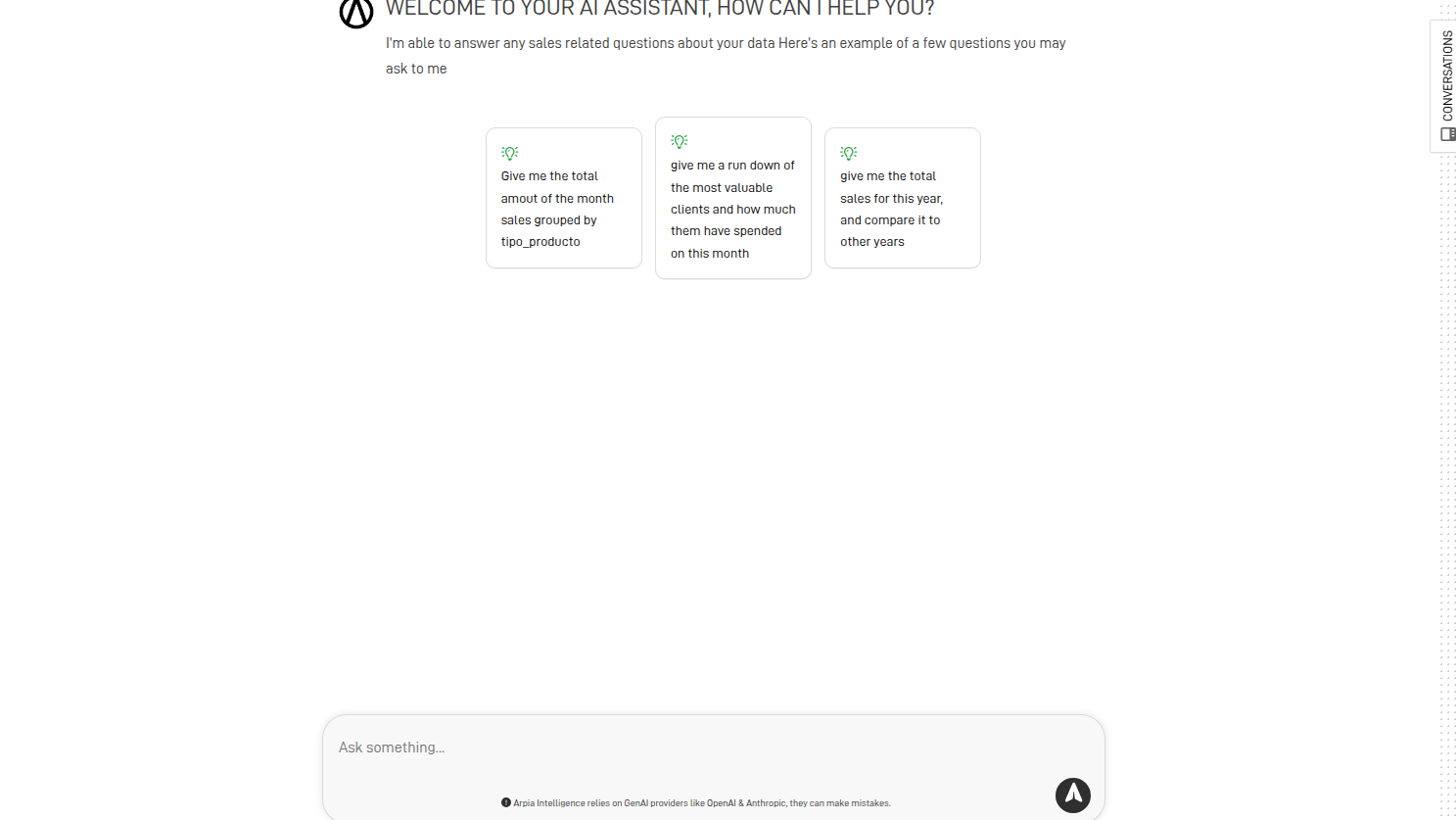

This settings tab allows customization of the initial information displayed in the chat.

Settings fields:

- Welcome message for the chat: Customize the initial message shown by the Singular AI chat.

- Amount of examples per row: Define how many prompt examples are displayed per row, ranging from 1 to 3.

- Prompt Examples for Ease of Use: Include predefined prompts to guide users on possible questions, enhancing user experience and understanding.

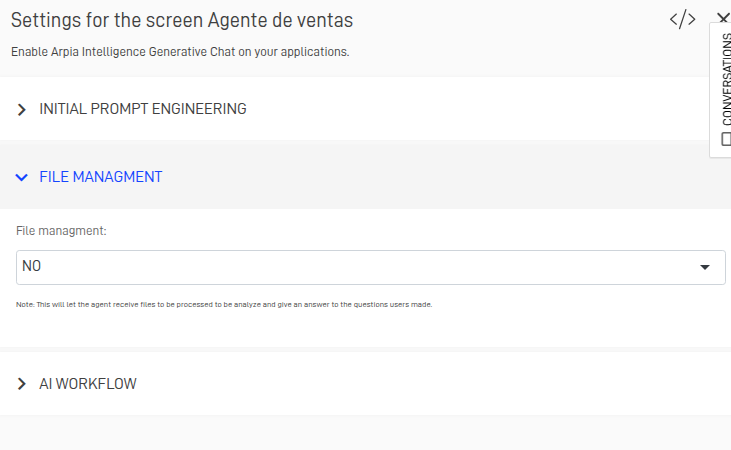
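As a rough illustration of the "Amount of examples per row" setting, the sketch below splits a list of prompt examples into rows. The function name `layout_examples` and the sample prompts are hypothetical; this only mirrors the 1-to-3 range described above and is not the product's rendering logic.

```python
from typing import List


def layout_examples(examples: List[str], per_row: int) -> List[List[str]]:
    """Split prompt examples into rows holding at most `per_row` items."""
    # The settings form describes a range of 1 to 3 examples per row.
    if not 1 <= per_row <= 3:
        raise ValueError("per_row must be between 1 and 3")
    return [examples[i:i + per_row] for i in range(0, len(examples), per_row)]


# Hypothetical prompt examples, for illustration only.
rows = layout_examples(
    [
        "Show sales by region",
        "List open tickets",
        "Summarize last week",
        "Top 5 customers",
    ],
    per_row=3,
)
for row in rows:
    print(" | ".join(row))
```

With four examples and three per row, the last row holds the single remaining example.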

Step 3: Configure the "File Management" settings

This feature enables users to upload files and interact with the AI Assistant by asking questions based on the uploaded content. The assistant can analyze documents, extract insights, and provide context-aware responses to streamline data retrieval and decision-making.

Step 4: Configure the "AI Workflow" settings

These settings define the workflow that prompts and information follow. The workflow is built from blocks, and its configuration significantly affects the output shown to the user.

It has three blocks: Add Tool Block, Add Processor Block, and Output Block.

Add Tool Block

Add LLM

This block enables integration with a Large Language Model (LLM) for text generation.

- Prompt Engineering: Customize prompts using output variables.

- Include Prompt History: Utilize past prompts for enhanced context.

- Model: Select the LLM model.

- Max Tokens: Define output length.

- Temperature: Adjust creativity levels (0 to 2).

- Output Variable: Name of the variable storing the LLM output.

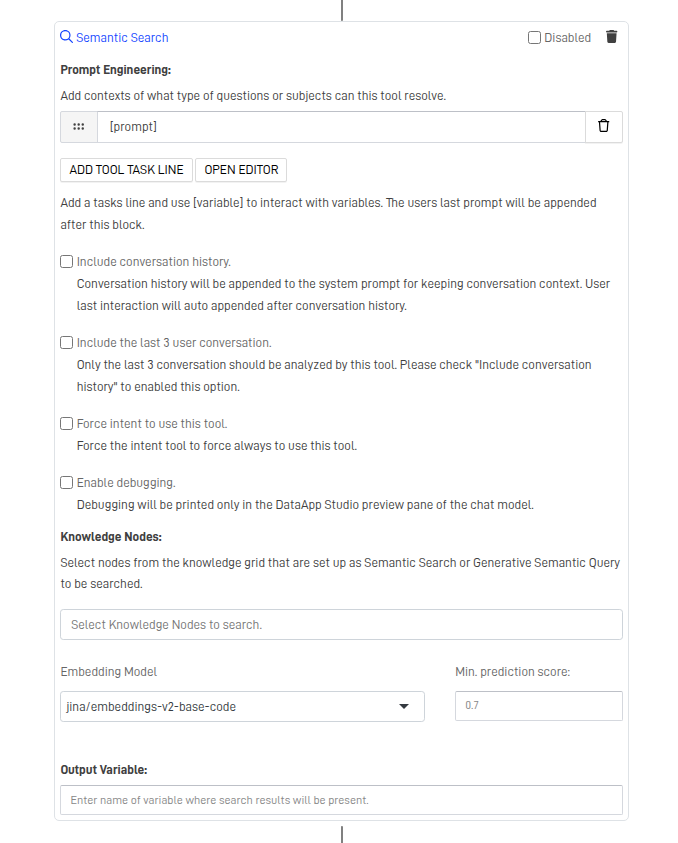
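The settings above can be pictured as a small record plus a prompt template. The sketch below is a minimal illustration under stated assumptions: the class `LLMBlockSettings`, its field names, the `$name` placeholder syntax, and the model name are all hypothetical and do not represent the product's API. Only the 0-to-2 temperature range comes from the form itself.

```python
from dataclasses import dataclass
from string import Template
from typing import Dict


@dataclass
class LLMBlockSettings:
    # Hypothetical field names that mirror the labels in the settings form.
    prompt: str
    model: str
    max_tokens: int
    temperature: float
    include_prompt_history: bool
    output_variable: str

    def validate(self) -> None:
        """Check the ranges described in the form."""
        if not 0 <= self.temperature <= 2:
            raise ValueError("temperature must be between 0 and 2")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")
        if not self.output_variable:
            raise ValueError("output_variable must be named")


def render_prompt(settings: LLMBlockSettings, variables: Dict[str, str]) -> str:
    """Fill the prompt with values taken from earlier output variables."""
    # The `$name` placeholder syntax is an assumption made for this sketch.
    return Template(settings.prompt).substitute(variables)


settings = LLMBlockSettings(
    prompt="Context: $search_results\nQuestion: $user_input",
    model="example-model",  # placeholder, not a real model name
    max_tokens=512,
    temperature=0.7,
    include_prompt_history=True,
    output_variable="llm_answer",
)
settings.validate()
print(render_prompt(settings, {
    "search_results": "Order 42 shipped on Monday.",
    "user_input": "When did order 42 ship?",
}))
```

Lower temperature values tend to give more predictable answers, and higher values give more varied ones.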

Semantic Search

This block processes the meaning of words and phrases to retrieve relevant information.

- Input Variable: Name of the variable or prompt representing the user input.

- Knowledge Nodes: Select nodes used for semantic search.

- Embedding Model: Choose the embedding model used for the search.

- Min. Prediction Score: Define the minimum score for considering a result relevant.

- Output Variable: Name of the variable storing output results.

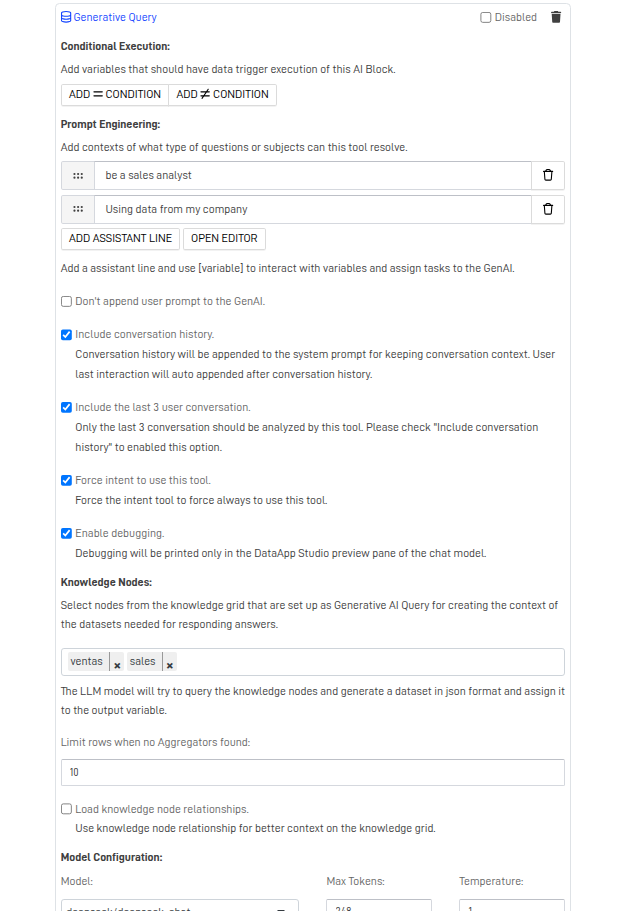
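To show how a minimum score can filter search results, here is a minimal sketch that ranks candidates by cosine similarity between embedding vectors. This is an assumption made for illustration: the guide does not state how the prediction score is computed, and the vectors, document names, and the function `semantic_search` are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(
    query_vec: List[float],
    documents: Dict[str, List[float]],
    min_score: float,
) -> List[Tuple[str, float]]:
    """Score every document, drop those below min_score, sort best first."""
    scored = [
        (name, cosine_similarity(query_vec, vec))
        for name, vec in documents.items()
    ]
    kept = [(name, score) for name, score in scored if score >= min_score]
    return sorted(kept, key=lambda item: item[1], reverse=True)


# Toy three-dimensional embeddings; real embedding models use far more dimensions.
documents = {
    "invoices": [0.9, 0.1, 0.0],
    "shipping": [0.1, 0.9, 0.2],
    "returns": [0.7, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]

results = semantic_search(query, documents, min_score=0.8)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Raising the minimum score returns fewer but closer matches; lowering it returns more results at the cost of relevance.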

Generative Query

This block generates responses based on user input and knowledge nodes.

- Prompt Engineering: Customize prompts using output variables.

- Include User’s Last Prompt: Use the last user prompt for context.

- Include Prompt History: Utilize conversation history for response generation.

- Knowledge Nodes: Select nodes to define response context.

- Limit Rows When No Aggregators Found: Restrict row count when no aggregators are set.

- Model: Select the AI model used for response generation.

- Max Tokens: Define the maximum length of generated responses.

- Temperature: Adjust response predictability vs. creativity (0 to 2).

- Dataset Variable: Name of the variable storing the dataset.

- Embedded Chart: Name of the variable storing embedded charts.

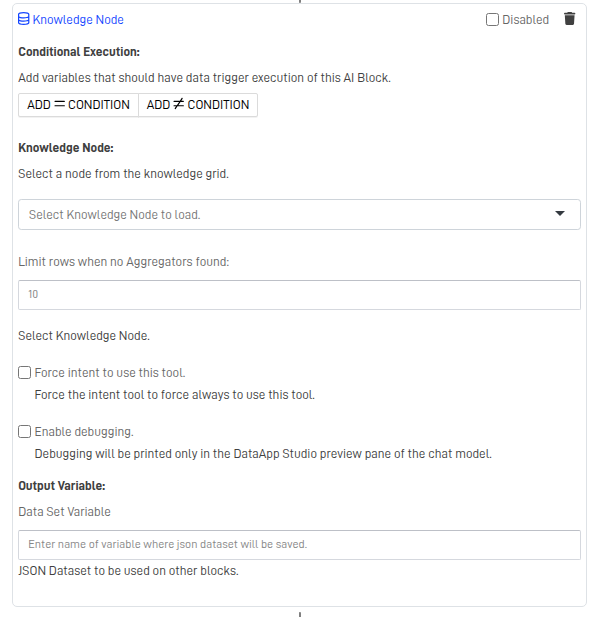

Knowledge Node

This block embeds a Node and generates a JSON file as a variable, which can be used for context or further filtering within the flow.

- Select a Node from the Knowledge Grid: The user can select any Node that has been added to the Neural Network.

- Enable Debugging: Provides feedback about the internal processes.

- Output Variable: Creates a JSON object that can be used through the flow.

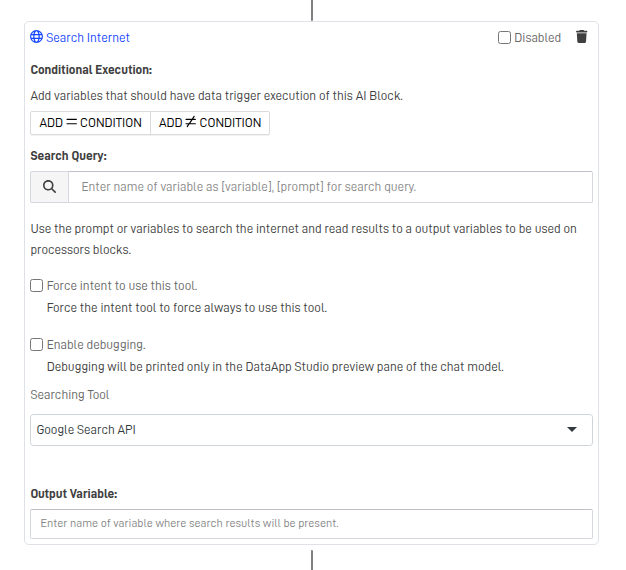
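The idea of a JSON output variable that later blocks can filter might look like the sketch below. The rows, the `workflow_variables` dictionary, and the variable name `orders_node` are hypothetical stand-ins; the actual JSON structure produced by the block is not documented here.

```python
import json
from typing import Any, Dict

# Hypothetical rows standing in for the contents of a Node.
node_rows = [
    {"order_id": 1, "region": "North", "total": 120.0},
    {"order_id": 2, "region": "South", "total": 80.5},
    {"order_id": 3, "region": "North", "total": 42.0},
]

# A plain dictionary stands in for the workflow's variable store.
workflow_variables: Dict[str, Any] = {}

# The Knowledge Node block saves the Node as JSON under its output variable.
workflow_variables["orders_node"] = json.dumps(node_rows)

# A later block reads the variable back and filters it before passing it on.
orders = json.loads(workflow_variables["orders_node"])
north_orders = [row for row in orders if row["region"] == "North"]
print(north_orders)
```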

Search Internet

This block fetches data from the internet based on a given prompt or a workflow variable. The answer is saved in an output variable for use in the processor block.

Add Processor Block

This section processes various workflow outputs to generate the final response displayed in the chat. It can provide answers in natural language, visuals, or a combination of both.

AI Data Graphing

This block uses variables from previous steps in the flow to generate an output containing the code for creating plots.

- Input Variable: A variable from the flow to use as the dataset.

- Model Configuration: Select the model that generates the answer, and set the number of tokens to receive and the creativity of the responses.

- Output Variable: Contains the code that renders the plots.

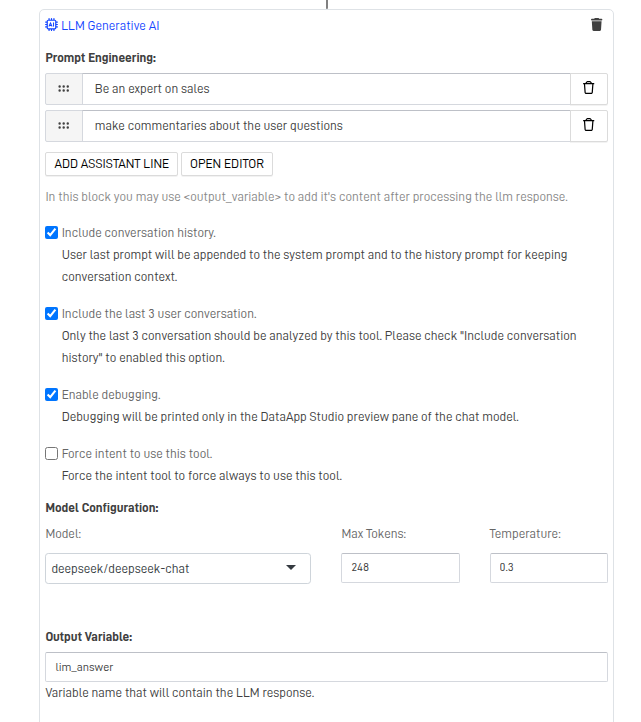

LLM Generative AI

This block generates the answer for the user. It can be tuned with prompts, by adjusting the temperature, and by providing context from previous conversations.

- Prompt Engineering: Format the answer the user will receive based on prompts.

- Model Configuration: Select the model that generates the answer, and set the number of tokens to receive and the creativity of the responses.

- Output Variable: Name of the variable that stores the LLM output.

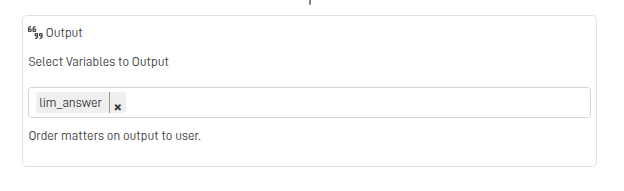

Output Block

Selects the variable used to respond to the user based on the initial prompt and workflow processes. The order in which workflow variables are selected affects how the answers are displayed.
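As a hedged sketch of why selection order matters, the example below joins workflow variables into one response in the order they are selected. The function `build_response` and the variable names are hypothetical; the product's actual rendering may differ.

```python
from typing import Dict, List


def build_response(variables: Dict[str, str], selected: List[str]) -> str:
    """Join the selected workflow variables in the order they were chosen."""
    # Names that were never produced by the workflow are skipped.
    parts = [variables[name] for name in selected if name in variables]
    return "\n\n".join(parts)


# Hypothetical outputs from earlier blocks in the workflow.
variables = {
    "llm_answer": "Sales rose 12% in the North region.",
    "chart_code": "<chart: sales by region>",
}

# Text first, chart second.
print(build_response(variables, ["llm_answer", "chart_code"]))

# Reversing the selection puts the chart above the text.
print(build_response(variables, ["chart_code", "llm_answer"]))
```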
